Comparison of United Kingdom and United States screening criteria for detecting retinopathy of prematurity in Hong Kong

Hong Kong Med J 2023 Aug;29(4):330–6 | Epub 21 Jul 2023

© Hong Kong Academy of Medicine. CC BY-NC-ND 4.0

ORIGINAL ARTICLE

Lawrence PL Iu, FHKAM (Ophthalmology), MPH (HK)1; Wilson WK Yip, FHKAM (Ophthalmology)1; Julie YC Lok, FHKAM (Ophthalmology)1; Mary Ho, FHKAM (Ophthalmology)1; Leanne TY Cheung, MB, BS2; Tania HM Wu, MB, BS3; Alvin L Young, FHKAM (Ophthalmology), FRCOphth1

1 Department of Ophthalmology and Visual Sciences, Prince of Wales Hospital, Hong Kong SAR, China

2 Department of Ophthalmology, Tung Wah Eastern Hospital, Hong Kong SAR, China

3 Department of Paediatrics and Adolescent Medicine, Pamela Youde Nethersole Eastern Hospital, Hong Kong SAR, China

Corresponding author: Dr Lawrence PL Iu (dr.lawrenceiu@gmail.com)

Abstract

Introduction: We examined whether the United Kingdom (UK) or the United States (US) screening criteria are more appropriate for retinopathy of prematurity (ROP) screening in Hong Kong, in terms of sensitivity for detecting type 1 ROP and the number of infants requiring screening.

Methods: In this retrospective cohort study, we reviewed the medical records of all infants who underwent ROP screening from 2009 to 2018 at a tertiary hospital in Hong Kong. During this period, all infants born at gestational age (GA) ≤31 weeks and 6 days or birth weight (BW) <1501 g (ie, the UK screening criteria) underwent ROP screening. We determined the number of infants requiring screening and the number of type 1 ROP cases that would have been missed if the US screening criteria (GA ≤30 weeks & 0 days or BW ≤1500 g) had been used.

Results: Overall, 796 infants were screened using the UK screening criteria. If the US screening criteria had been used, the number of infants requiring screening would have decreased by 21.1%; all type 1 ROP cases would have been detected (38/38, 100% sensitivity). Of the 168 infants who would not have been screened using the US screening criteria, only four of them (2.4%) had developed ROP (all maximum stage 1 only).

Conclusion: In our population, the use of the US screening criteria could reduce the number of infants screened without compromising sensitivity for the detection of type 1 ROP requiring treatment. We suggest narrowing the GA criterion for consistency with the US screening criteria during ROP screening in Hong Kong.

New knowledge added by this study

- In our population, the use of the United States (US) screening criteria, instead of the United Kingdom (UK) criteria, could reduce the number of infants requiring retinopathy of prematurity (ROP) screening by 21.1%.

- The use of the US screening criteria would have detected 100% of type 1 ROP cases over a 10-year period, compared with the UK screening criteria, indicating that the US screening criteria would not compromise sensitivity for the detection of type 1 ROP requiring treatment in Hong Kong.

- There is a need to consider narrowing the gestational age criterion for consistency with the US screening criteria during ROP screening in Hong Kong.

- A review of published literature indicates that our screening outcomes considerably differ from findings in other Asian countries, suggesting that our results are not generalisable to regions outside of Hong Kong.

Introduction

Retinopathy of prematurity (ROP) is a proliferative retinal vascular disease that affects premature infants.1 Infants born at low gestational age (GA) and/or low birth weight (BW) have a risk of ROP.2 Without timely intervention, severe ROP can progress to retinal detachment and blindness. Currently, ROP is one of the leading preventable causes of childhood blindness worldwide.3

Successful management of ROP relies on appropriate screening for early detection of high-risk disease, along with prompt treatment to prevent disease progression and visual loss. The United Kingdom (UK) Guidelines (published in 2008 by the Royal College of Paediatrics and Child Health, the Royal College of Ophthalmologists, and the British Association of Perinatal Medicine) recommend that all infants born at GA ≤31 weeks and 6 days or BW <1501 g undergo ROP screening.4 On the other hand, the United States (US) Guidelines (published in 2013 and 2018 by the American Academy of Pediatrics, American Academy of Ophthalmology, and American Association for Pediatric Ophthalmology and Strabismus) use narrower criteria; they recommend that all infants born at GA ≤30 weeks and 0 days or BW ≤1500 g undergo ROP screening.5 6

In Hong Kong, many hospitals use the UK screening criteria to guide ROP detection.7 8 9 Although the UK screening criteria are appropriate for ROP detection in many countries,10 11 12 they are not universally appropriate.13 14 15 16 17 18 In India14 15 19 and China,17 18 some infants with GA and BW above the UK screening thresholds also developed severe ROP requiring treatment. Thus, there is a need to understand the epidemiology of ROP in Hong Kong and evaluate the utility of current international guidelines for ROP detection in Hong Kong infants.

In the Early Treatment for Retinopathy of Prematurity study,20 type 1 ROP was defined as: (1) zone I, any stage of ROP, with plus disease; (2) zone I, stage 3 ROP, without plus disease; or (3) zone II, stage 2 or 3 ROP, with plus disease. Type 1 ROP requires treatment.4 6 Although it is important not to miss any infants who develop type 1 ROP requiring treatment, it is also important to avoid unnecessarily screening a large number of infants because the ROP screening procedure is painful and distressing for premature infants; it can lead to oxygen desaturation, tachycardia, and apnoea.2 21 22 There is also a need to limit the systemic absorption of dilating eye drops that may cause adverse events.23 24 An effective strategy would reduce the number of infants unnecessarily screened without missing any cases of severe ROP requiring treatment. This study was conducted to determine whether the UK or the US screening criteria are more appropriate for Hong Kong, in terms of sensitivity for detecting type 1 ROP and the number of infants requiring screening.
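The three-part definition of type 1 ROP quoted above can be written as a simple rule. The following Python sketch is illustrative only and is not part of the study; the `RopFinding` class and its field names are hypothetical, and the rule encodes nothing beyond the three criteria listed in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RopFinding:
    """Most severe finding for one infant (hypothetical field names)."""
    zone: int           # retinal zone: 1, 2 or 3
    stage: int          # 0 means no ROP; 1 to 5 are ROP stages
    plus_disease: bool  # True if plus disease is present


def is_type1_rop(finding: RopFinding) -> bool:
    """Return True if the finding meets any of the three type 1 criteria."""
    if finding.stage < 1:
        return False  # no ROP at all
    if finding.zone == 1 and finding.plus_disease:
        return True   # (1) zone I, any stage of ROP, with plus disease
    if finding.zone == 1 and finding.stage == 3 and not finding.plus_disease:
        return True   # (2) zone I, stage 3 ROP, without plus disease
    if finding.zone == 2 and finding.stage in (2, 3) and finding.plus_disease:
        return True   # (3) zone II, stage 2 or 3 ROP, with plus disease
    return False
```

For example, `is_type1_rop(RopFinding(zone=2, stage=1, plus_disease=True))` is `False` under this rule because zone II disease qualifies only at stage 2 or 3.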

Methods

Patients

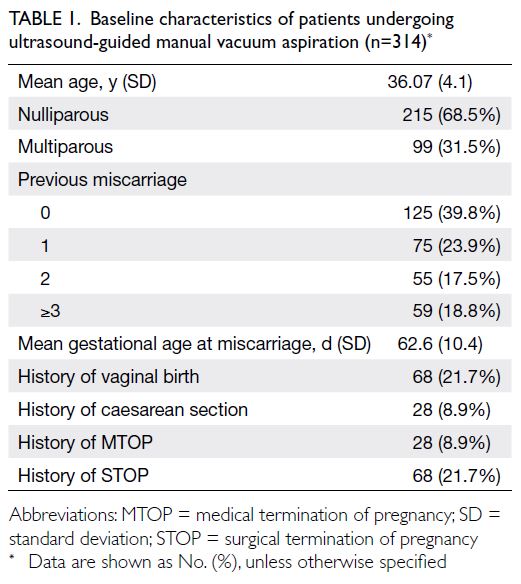

In this retrospective cohort study, we reviewed the medical records of all premature infants who underwent ROP screening between 1 January 2009 and 31 December 2018 in Prince of Wales Hospital, Hong Kong. During the study period, all infants born at GA ≤31 weeks and 6 days or BW <1501 g (ie, UK screening criteria) underwent ROP screening. Infants with GA and BW above the UK screening threshold who had a high risk of ROP because of an unstable clinical course also underwent ROP screening at the request of the attending neonatologist. Analyses were performed to determine the numbers of ROP and type 1 ROP cases that would have been detected and missed if the US screening criteria (GA ≤30 weeks & 0 days or BW ≤1500 g) had been used.
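As an illustration of how the two sets of thresholds compare, the sketch below encodes the UK and US criteria exactly as stated in the text. It is not the authors' analysis code (the study used R); the function and constant names are hypothetical, and GA is represented in completed days for convenience.

```python
def ga_in_days(weeks: int, days: int = 0) -> int:
    """Convert a gestational age given as weeks + days into total days."""
    return weeks * 7 + days


# Thresholds as stated in the text.
UK_GA_MAX_DAYS = ga_in_days(31, 6)  # GA <= 31 weeks and 6 days
UK_BW_UPPER_G = 1501                # BW < 1501 g
US_GA_MAX_DAYS = ga_in_days(30, 0)  # GA <= 30 weeks and 0 days
US_BW_MAX_G = 1500                  # BW <= 1500 g


def meets_uk_criteria(ga_days: int, bw_g: float) -> bool:
    """UK criteria: either the GA or the BW threshold is met."""
    return ga_days <= UK_GA_MAX_DAYS or bw_g < UK_BW_UPPER_G


def meets_us_criteria(ga_days: int, bw_g: float) -> bool:
    """US criteria: either the GA or the BW threshold is met."""
    return ga_days <= US_GA_MAX_DAYS or bw_g <= US_BW_MAX_G


# An infant born at 31 weeks and 2 days weighing 1600 g would be
# screened under the UK criteria but not under the US criteria.
example_ga = ga_in_days(31, 2)
print(meets_uk_criteria(example_ga, 1600), meets_us_criteria(example_ga, 1600))
```

When BW is recorded in whole grams, BW <1501 g and BW ≤1500 g select the same infants, so in practice the two sets of criteria differ only in the GA threshold.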

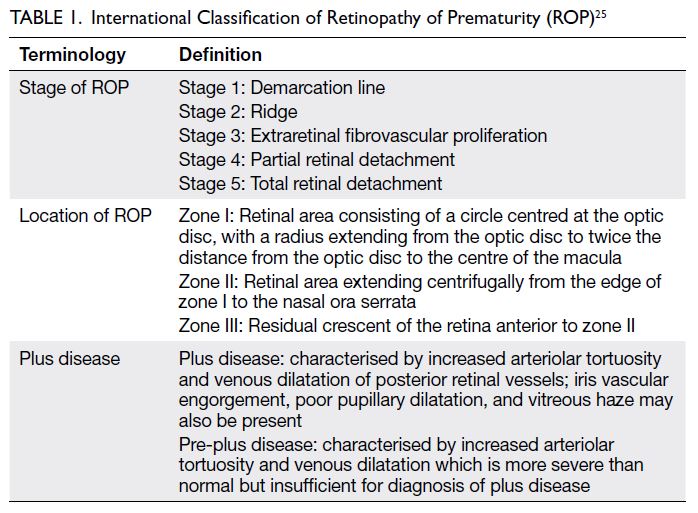

All infants who underwent ROP screening in Prince of Wales Hospital were included. Infants were excluded if they died or were transferred to other institutions before completion of ROP screening without a known ROP outcome. Data were recorded concerning GA, BW, most severe ROP stage, any treatment, and treatment outcome. ROP findings were classified in accordance with the International Classification of ROP25 (Table 1). Treatment was indicated for infants with type 1 ROP. If the ROP stage differed between eyes in an individual infant, the more severe ROP stage was used for analysis.

Outcome measures and statistical analysis

The primary outcome measure was the sensitivity of the US screening criteria, compared with the UK screening criteria, for detection of type 1 ROP. The secondary outcome measure was the number of infants requiring screening.

R software (R version 3.6.1) was used for statistical analysis. All demographic data were expressed as medians and interquartile ranges (IQRs).
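To show how a median and IQR of GA can be expressed in weeks and days, here is a minimal Python sketch using the standard library. It is not the authors' R code; the four GA values are hypothetical, and the choice of the `inclusive` quantile method is an assumption, because the text does not state which quantile definition was used.

```python
import statistics


def format_ga(total_days: float) -> str:
    """Format a gestational age (or GA difference) in days as weeks and days."""
    weeks, days = divmod(total_days, 7)
    return f"{int(weeks)} weeks and {days:g} days"


# Hypothetical gestational ages in days (not study data).
ga_sample_days = [175, 182, 183, 196]

median_days = statistics.median(ga_sample_days)
q1, _, q3 = statistics.quantiles(ga_sample_days, n=4, method="inclusive")
iqr_days = q3 - q1

print(format_ga(median_days))  # median GA in weeks and days
print(format_ga(iqr_days))     # IQR expressed as a GA difference
```

With an even number of observations, the median is the mean of the two middle values, which is one way a half-day value such as "0.5 days" can arise in a reported median GA.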

Results

Demographic data

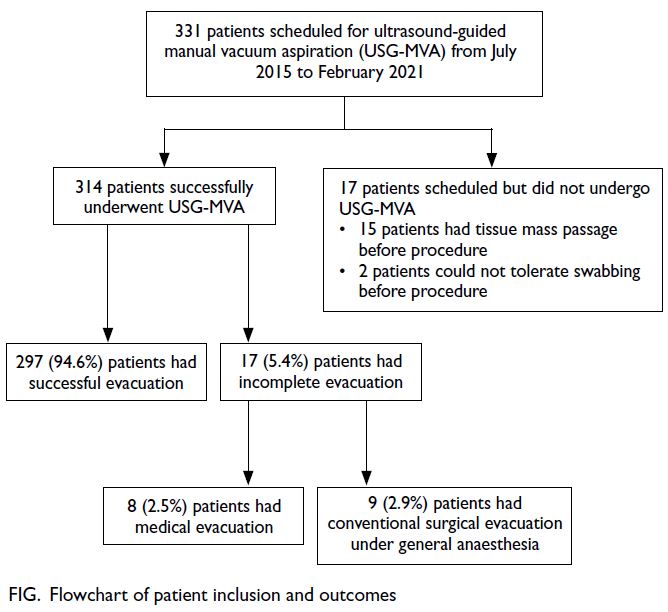

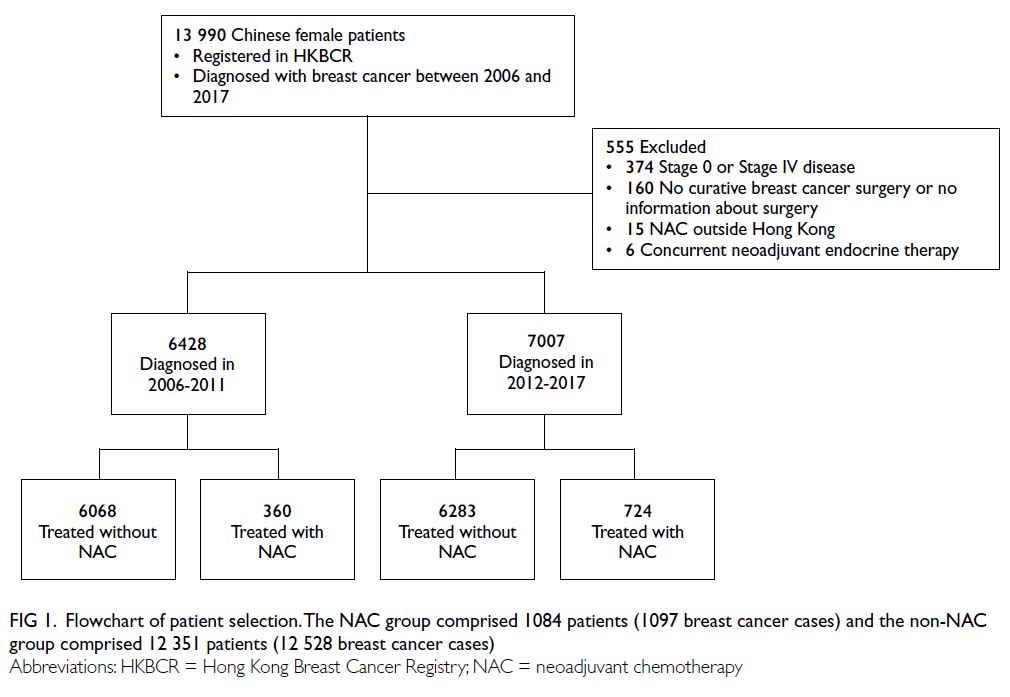

Of the 857 infants who underwent ROP screening in the study period, 61 were excluded because they died or were transferred to other hospitals before the completion of ROP screening. Thus, the remaining 796 infants (404 boys [50.8%] and 392 girls [49.2%]) were included in the study. The median GA was 30 weeks and 2 days (IQR=7 weeks & 3 days; range, 23 weeks & 4 days to 37 weeks & 4 days), and the median BW was 1320 g (IQR=471; range, 470-2550).

Incidences of retinopathy of prematurity and type 1 retinopathy of prematurity

In total, 238 infants (29.9%) developed ROP, including 38 infants (4.8%) who developed type 1 ROP requiring treatment. The median GA and BW of infants who developed ROP were 27 weeks and 4 days (IQR=3 weeks & 0 days; range, 23 weeks & 4 days to 35 weeks & 5 days) and 943 g (IQR=366; range, 470-2550), respectively. The median GA and BW of infants who developed type 1 ROP were 26 weeks and 0.5 days (IQR=2 weeks & 2.5 days; range, 23 weeks & 4 days to 32 weeks & 0 days) and 781 g (IQR=315; range, 510-1240), respectively. Among the infants who developed type 1 ROP requiring treatment, 81.6% were extremely preterm (GA <28 weeks) infants and 100% were extremely low BW (<1000 g) infants. Of the treated infants, 13 had stage 2 ROP and 25 had stage 3 ROP. No infants had stage 4 or 5 ROP.

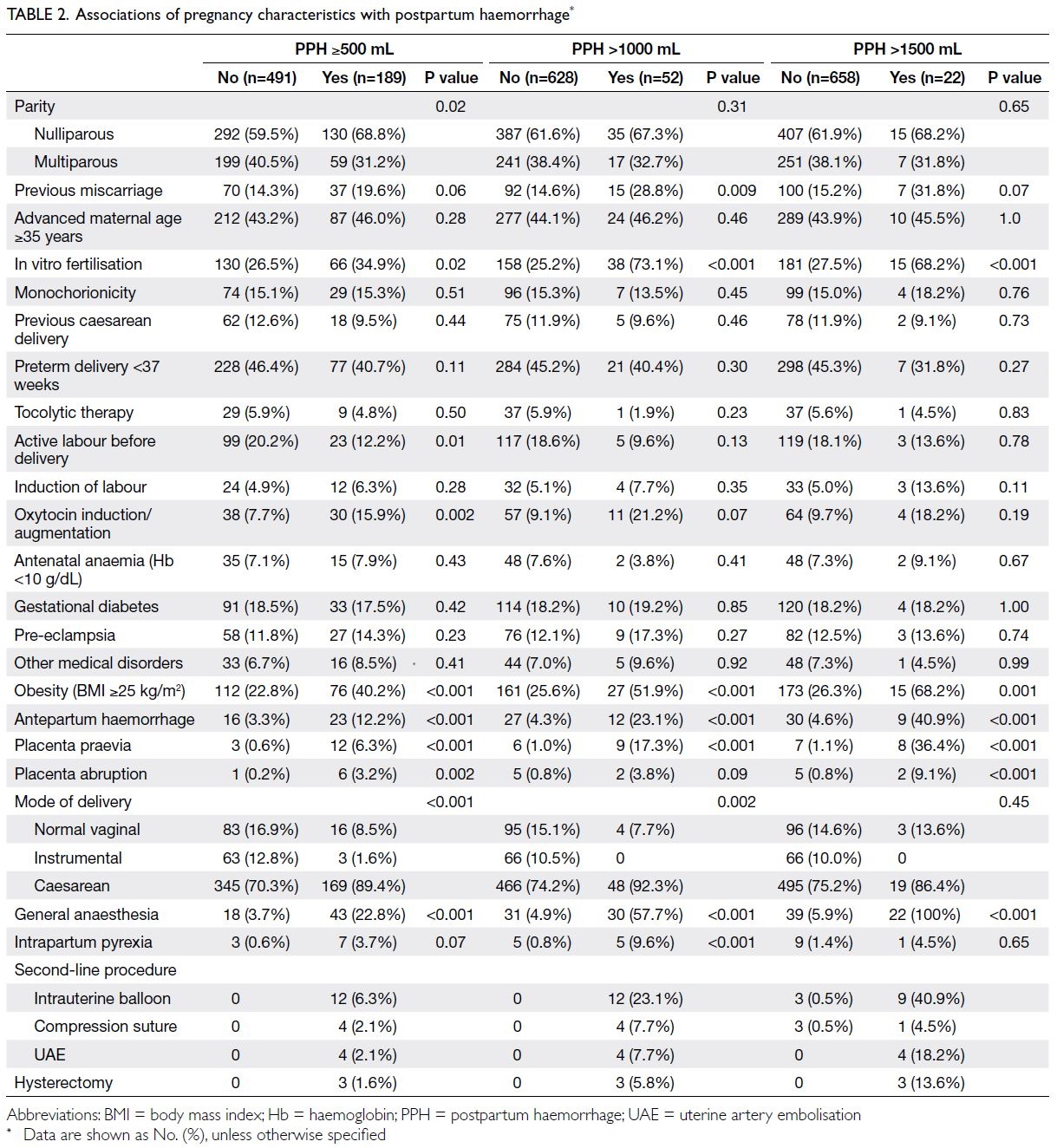

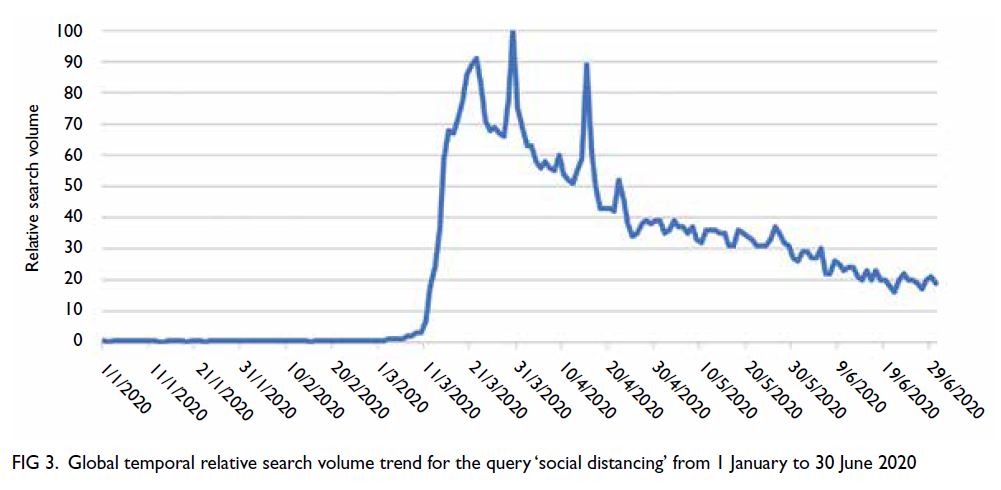

Retinopathy of prematurity cases detected using the United Kingdom screening criteria

In total, 795 infants underwent ROP screening in

accordance with the UK screening criteria. One

infant had a GA above the UK screening threshold;

however, the infant continued to undergo screening

because he was only 1 day older than the screening

threshold, and the attending neonatologist concluded

that he had a risk of ROP. The UK screening criteria

detected all cases of ROP (n=238) and type 1 ROP

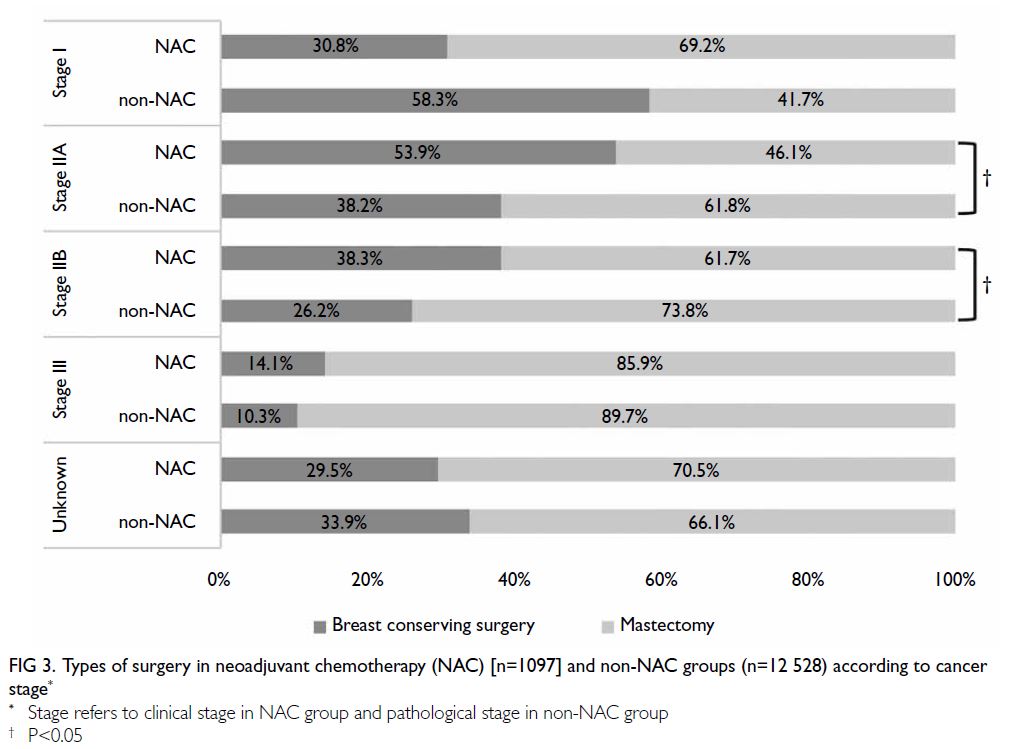

requiring treatment (n=38) [Table 2].

Table 2. Numbers of retinopathy of prematurity (ROP) and type 1 ROP cases detected using the United Kingdom (UK) and the United States (US) screening criteria

Retinopathy of prematurity cases detected using the United States screening criteria

If the US screening criteria had been used, the

number of infants receiving ROP screening would

have decreased to 627 (21.1% reduction compared

with the UK screening criteria) [Table 2]. The use

of the US screening criteria would have detected

234 cases of ROP (98.3% of cases detected using the

UK criteria, 234/238) and 38 cases of type 1 ROP

(100% of cases detected using the UK criteria, 38/38)

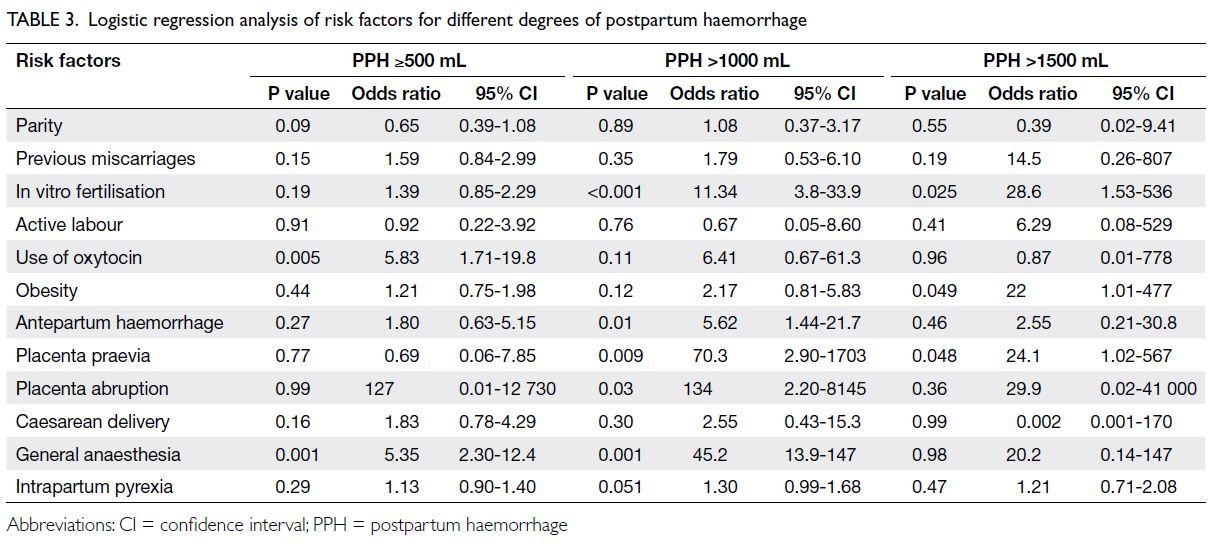

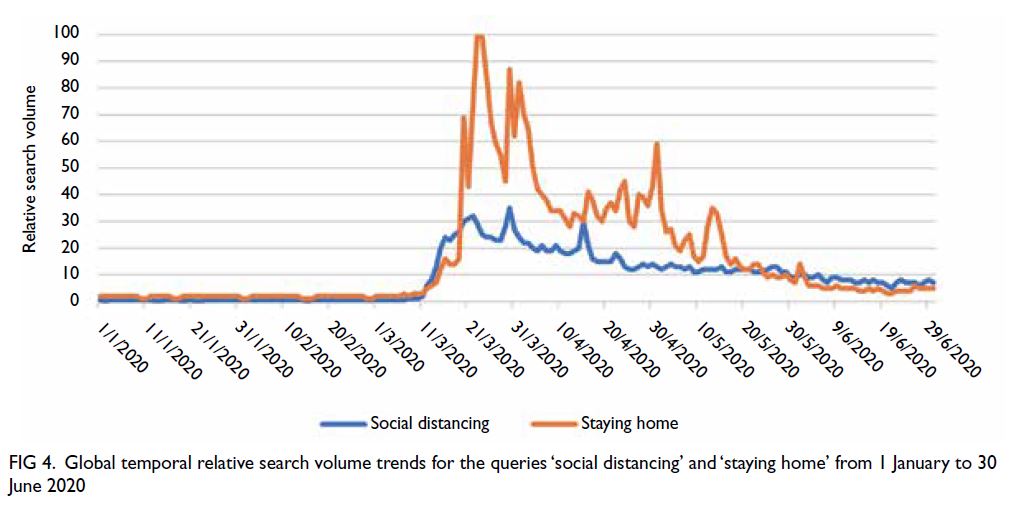

[Table 2]. Of the 168 infants who would not have

been screened using the US screening criteria, only 4 of them (2.4%) had developed ROP (Table 3) and all

cases were mild (maximum stage 1 only); all affected

infants displayed spontaneous resolution of ROP

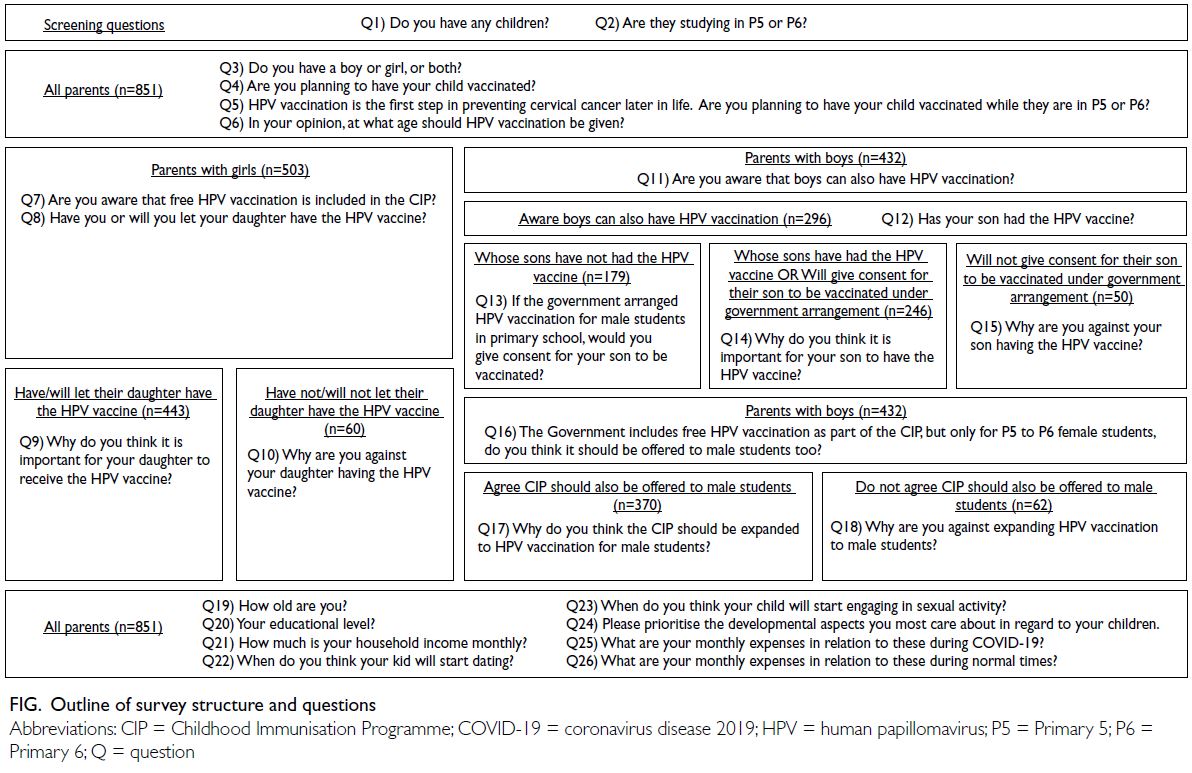

without the need for treatment. No cases of type 1

ROP were missed by the US screening criteria (ie,

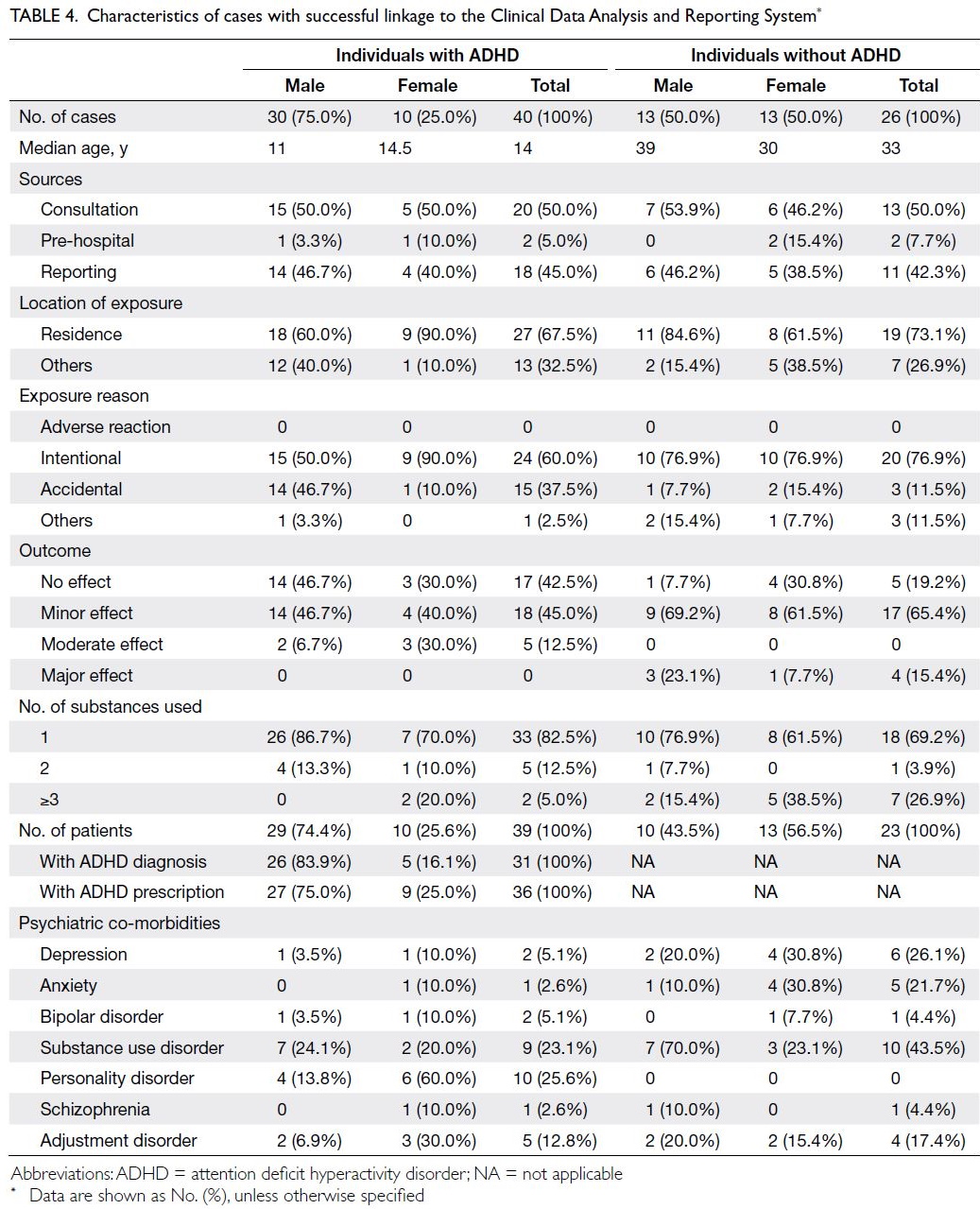

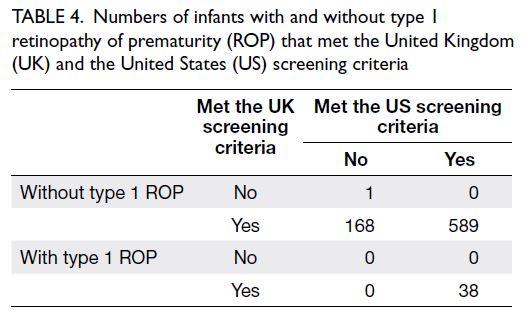

100% sensitivity) [Table 4].

Table 3. Numbers of infants with and without retinopathy of prematurity (ROP) of any severity that met the United Kingdom (UK) and the United States (US) screening criteria

Table 4. Numbers of infants with and without type 1 retinopathy of prematurity (ROP) that met the United Kingdom (UK) and the United States (US) screening criteria
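The percentages reported in this section follow directly from the counts given in the text. The short Python sketch below reproduces that arithmetic as a check; the variable names are illustrative, and the counts are those stated above (795 infants meeting the UK criteria, 627 meeting the US criteria, 238 and 234 ROP cases, and 38 type 1 ROP cases under both).

```python
# Counts as reported in the text.
uk_screened = 795          # infants meeting the UK criteria
us_screened = 627          # infants who would also meet the US criteria
rop_uk, rop_us = 238, 234  # ROP cases detected under each set of criteria
type1_uk, type1_us = 38, 38

# Infants who would no longer be screened under the US criteria.
not_screened = uk_screened - us_screened

# Relative reduction in screening workload.
reduction_pct = 100 * not_screened / uk_screened

# Proportion of any-severity ROP still detected, and sensitivity for
# type 1 ROP, both taking the UK-screened cohort as the reference.
rop_detected_pct = 100 * rop_us / rop_uk
type1_sensitivity_pct = 100 * type1_us / type1_uk

# ROP cases among the infants who would not have been screened.
rop_missed = rop_uk - rop_us
rop_missed_pct = 100 * rop_missed / not_screened

print(f"{not_screened} fewer infants screened ({reduction_pct:.1f}%)")
print(f"ROP detected: {rop_detected_pct:.1f}%")
print(f"Type 1 ROP sensitivity: {type1_sensitivity_pct:.0f}%")
print(f"ROP among unscreened infants: {rop_missed} ({rop_missed_pct:.1f}%)")
```

Sensitivity here is relative to the cases found under the UK criteria, because infants outside the UK criteria were not systematically screened.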

Discussion

This study showed that if the US screening criteria had been used, instead of the UK screening criteria, the number of infants screened in our population would have decreased by 21.1% without missing any case of type 1 ROP requiring treatment. The number of ROP cases that would have been missed was very small (n=4), and all cases were mild (maximum stage 1).

Previous studies showed that many hospitals in Hong Kong follow the UK screening criteria for ROP screening7 8 9 26 27; consistent with our findings, the reported incidences of ROP and type 1 ROP in Hong Kong were 16% to 28%7 8 9 and 3.4% to 3.8%,7 8 9 respectively. In the present study, type 1 ROP mainly developed in extremely preterm infants with a median GA of 26 weeks and 0.5 days (IQR=2 weeks & 2.5 days), suggesting that low GA was an important predictor of type 1 ROP in our population. Because the GA criterion is lower in the US screening criteria (≤30 weeks & 0 days) than in the UK screening criteria (≤31 weeks & 6 days), the US screening criteria may be more appropriate for Hong Kong.

Our findings were also consistent with the results of a study conducted in another hospital in Hong Kong7; in that study, 12.4% of infants would not have required ROP screening if the US screening criteria had been used rather than the UK criteria, and none of those infants developed ROP. Our results suggest similar outcomes in different hospitals across Hong Kong.

In a study conducted in Shanghai in mainland China, the screening thresholds were GA of 34 weeks and BW of 2000 g. The mean GA and BW of infants requiring ROP treatment were 29.3 weeks (range, 24-35) and 1331 g (range, 750-2550), respectively17; these infants were more mature and heavier than the infants in our study. The Shanghai study showed that 9% of severe ROP cases requiring treatment would have been missed if the UK screening criteria were used; 26% would have been missed if the US screening criteria were used.17 Another study conducted in Beijing in mainland China showed that 17% of treatment-requiring ROP cases would have been missed if the UK screening criteria were used; 21% would have been missed if the US screening criteria were used.18 Therefore, despite sharing the same Chinese ethnicity, infants with severe ROP differed in maturity between Hong Kong and mainland China. This discrepancy could be the result of variations in comorbidities, perinatal risk factors, standard of neonatal healthcare, and level of supplemental oxygen therapy used. Long oxygen duration, mechanical ventilation, and high level of supplemental oxygen are known risk factors for ROP.2 Therefore, the results of our study are not generalisable to regions outside of Hong Kong.

There is evidence that the UK and the US screening criteria are not appropriate for many low- and middle-income countries.15 19 28 29 In North India, 17% of severe ROP cases would have been missed if the US screening criteria were used; 22% would have been missed if the UK screening criteria were used.15 In South India, 8% of treatment-requiring ROP cases would have been missed if the US screening criteria were used; all of these cases were aggressive posterior ROP.19 In Saudi Arabia, 35% of infants older than the UK screening threshold developed ROP; one infant developed severe ROP (stage 3).28 In Turkey, severe ROP developed in 3.8% of infants born at ≥32 weeks and 6.5% of infants born at ≥1500 g.29

Although it is important not to miss any severe ROP cases, it is also preferable to avoid missing mild ROP cases because the detection of early ROP (even mild cases) can influence decisions regarding systemic management (eg, level of supplemental oxygen), thereby reducing the rate of ROP progression. In the present study, only four cases of mild ROP would have been missed by the US screening criteria; this number was very small, compared with the 168 infants (21.1%) who could have been excluded from screening. The number of screened infants required to detect one additional case of ROP was 42 (ie, 168/4). Considering that few mild ROP cases were missed in exchange for the exclusion of a large number of infants from screening, we conclude that it is acceptable and appropriate to use the US screening criteria for ROP screening in Hong Kong.
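The figure of 42 quoted above is the number of additional infants screened per additional ROP case found when the wider UK criteria are used. A minimal Python sketch of that calculation follows; the function name is hypothetical and the inputs are the counts given in the text.

```python
def number_needed_to_screen(extra_screened: int, extra_cases: int) -> float:
    """Additional infants screened per additional case detected.

    Raises ValueError when no additional cases are detected, because the
    ratio is then undefined.
    """
    if extra_cases <= 0:
        raise ValueError("no additional cases detected; ratio is undefined")
    return extra_screened / extra_cases


# 168 additional infants screened under the UK criteria yielded
# 4 additional cases of (mild) ROP.
nns_any_rop = number_needed_to_screen(168, 4)
print(nns_any_rop)
```

For type 1 ROP the same ratio is undefined in this cohort, because the 168 additional screenings detected no additional type 1 cases.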

Benefits from reduction in number of retinopathy of prematurity screenings

There are several benefits to reducing the number of infants screened without compromising the detection of severe ROP. First, this modified approach minimises unnecessary stress and the potential for ROP screening-related adverse events among infants. Previous studies revealed significant elevation of blood pressure, increase in pulse rate, and decrease in oxygen saturation, which persisted after ROP screening.30 A significant increase in the number of apnoea events was also observed after screening.31 Approximately half of infants develop bradycardia from the oculocardiac reflex caused by scleral depression during screening.32 Second, this modified approach can reduce hospital expenses. The estimated cost of ROP screening is approximately US$230 per infant in the US33 and US$198.9 per infant in India.34 Third, the approach can reduce the length of hospitalisation related to delays in the completion of ROP screening.35 Finally, it may minimise unnecessary parental stress and anxiety. For example, one study showed that parents of infants undergoing ROP screening had significantly higher anxiety and depression scores compared with the general population.36

In recent decades, several ROP prediction models have been developed to improve screening sensitivity and specificity, including WINROP,37 38 ROPScore,39 CHOP ROP,40 41 CO-ROP,42 STEP-ROP,43 and G-ROP.44 45 However, these prediction models have many limitations. First, they require the collection of postnatal data such as postnatal weight gain and insulin-like growth factor 1 level, which may not be available to ophthalmologists. Second, the mechanisms by which these predictive factors would interact to affect ROP outcome are not fully understood. Third, these models were all derived from Western countries and may not be appropriate for Asian populations.46 Finally, none of these models have been validated in Hong Kong. Considering our findings in the present study, we suggest narrowing the GA screening criterion to ≤30 weeks and 0 days, consistent with the US screening criteria; this simple and straightforward approach avoids the need for calculations required by prediction models.

Limitations

This study had several limitations. First, its retrospective design hindered the assessment of other risk factors (eg, supplemental oxygen level and comorbidities) that may affect ROP outcomes. Second, because of the retrospective design, we could not determine whether the use of a narrower GA screening criterion would reduce the number of screenings in real-world clinical practice. A prospective cohort study is needed to confirm our findings. Third, although the G-ROP screening criteria are more sensitive and specific than the current US screening criteria for populations in the US,44 45 we could not evaluate the suitability of G-ROP criteria in our population because we lacked data concerning postnatal weight gain. Finally, data were missing regarding infants who died or were transferred to other hospitals without a known ROP outcome. Despite these limitations, our findings are robust because the present study revealed consistent results when the same screening practices were applied to a large number of infants over a study period of 10 years.

Conclusion

Compared with the UK screening criteria, the US screening criteria appeared to be more appropriate for our population because they could greatly reduce the number of infants screened without compromising sensitivity for the detection of type 1 ROP. Thus, we suggest narrowing the GA criterion for consistency with the US screening criteria during ROP screening in Hong Kong. A prospective cohort study is needed to further explore the impact of changes to the screening criteria.

Author contributions

Concept or design: LPL Iu, WWK Yip.

Acquisition of data: LPL Iu, LTY Cheung, THM Wu.

Analysis or interpretation of data: LPL Iu, WWK Yip, JYC Lok.

Drafting of the manuscript: LPL Iu.

Critical revision of the manuscript for important intellectual content: WWK Yip, JYC Lok, M Ho, AL Young.

All authors had full access to the data, contributed to the study, approved the final version for publication, and take responsibility for its accuracy and integrity.

Conflicts of interest

All authors have disclosed no conflicts of interest.

Funding/support

This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Ethics approval

This research was approved by the Joint Chinese University of Hong Kong–New Territories East Cluster Clinical Research Ethics Committee (Ref No.: 2020.176) and was performed in accordance with the tenets of the Declaration of Helsinki. A waiver of obtaining patient consent has been approved by the Research Ethics Committee for this retrospective study.

References

1. Hartnett ME, Penn JS. Mechanisms and management of retinopathy of prematurity. N Engl J Med 2012;367:2515-26.

2. Kim SJ, Port AD, Swan R, Campbell JP, Chan RV, Chiang MF. Retinopathy of prematurity: a review of risk factors and their clinical significance. Surv Ophthalmol 2018;63:618-37.

3. Solebo AL, Teoh L, Rahi J. Epidemiology of blindness in children. Arch Dis Child 2017;102:853-7.

4. Wilkinson AR, Haines L, Head K, Fielder AR. UK retinopathy of prematurity guideline. Early Hum Dev 2008;84:71-4.

5. Fierson WM; American Academy of Pediatrics Section on Ophthalmology; American Academy of Ophthalmology; American Association for Pediatric Ophthalmology and Strabismus; American Association of Certified Orthoptists. Screening examination of premature infants for retinopathy of prematurity. Pediatrics 2013;131:189-95.

6. Fierson WM; American Academy of Pediatrics Section on Ophthalmology; American Academy of Ophthalmology; American Association for Pediatric Ophthalmology and Strabismus; American Association of Certified Orthoptists. Screening examination of premature infants for retinopathy of prematurity. Pediatrics 2018;142:e20183061.

7. Iu LP, Lai CH, Fan MC, Wong IY, Lai JS. Screening for retinopathy of prematurity and treatment outcome in a tertiary hospital in Hong Kong. Hong Kong Med J 2017;23:41-7.

8. Yau GS, Lee JW, Tam VT, et al. Incidence and risk factors of retinopathy of prematurity from 2 neonatal intensive care units in a Hong Kong Chinese population. Asia Pac J Ophthalmol (Phila) 2016;5:185-91.

9. Luk AS, Yip WW, Lok JY, Lau HH, Young AL. Retinopathy of prematurity: applicability and compliance of guidelines in Hong Kong. Br J Ophthalmol 2017;101:453-6.

10. Amer M, Jafri WH, Nizami AM, Shomrani AI, Al-Dabaan AA, Rashid K. Retinopathy of prematurity: are we missing any infant with retinopathy of prematurity? Br J Ophthalmol 2012;96:1052-5.

11. Chaudhry TA, Hashmi FK, Salat MS, et al. Retinopathy of prematurity: an evaluation of existing screening criteria in Pakistan. Br J Ophthalmol 2014;98:298-301.

12. Ugurbas SC, Gulcan H, Canan H, Ankarali H, Torer B, Akova YA. Comparison of UK and US screening criteria for detection of retinopathy of prematurity in a developing nation. J AAPOS 2010;14:506-10.

13. Akman I, Demirel U, Yenice O, Ilerisoy H, Kazokoğlu H, Ozek E. Screening criteria for retinopathy of prematurity in developing countries. Eur J Ophthalmol 2010;20:931-7.

14. Jalali S, Matalia J, Hussain A, Anand R. Modification of screening criteria for retinopathy of prematurity in India and other middle-income countries. Am J Ophthalmol 2006;141:966-8.

15. Vinekar A, Dogra MR, Sangtam T, Narang A, Gupta A. Retinopathy of prematurity in Asian Indian babies weighing greater than 1250 grams at birth: ten year data from a tertiary care center in a developing country. Indian J Ophthalmol 2007;55:331-6.

16. Dogra MR, Katoch D, Dogra M. An update on retinopathy of prematurity (ROP). Indian J Pediatr 2017;84:930-6.

17. Xu Y, Zhou X, Zhang Q, et al. Screening for retinopathy of prematurity in China: a neonatal units-based prospective study. Invest Ophthalmol Vis Sci 2013;54:8229-36.

18. Chen Y, Li XX, Yin H, et al. Risk factors for retinopathy of prematurity in six neonatal intensive care units in Beijing, China. Br J Ophthalmol 2008;92:326-30.

19. Hungi B, Vinekar A, Datti N, et al. Retinopathy of prematurity in a rural neonatal intensive care unit in South India—a prospective study. Indian J Pediatr 2012;79:911-5.

20. Early Treatment for Retinopathy of Prematurity Cooperative Group. Revised indications for the treatment of retinopathy of prematurity: results of the early treatment for retinopathy of prematurity randomized trial. Arch Ophthalmol 2003;121:1684-94.

21. Kandasamy Y, Smith R, Wright IM, Hartley L. Pain relief for premature infants during ophthalmology assessment. J AAPOS 2011;15:276-80.

22. Cohen AM, Cook N, Harris MC, Ying GS, Binenbaum G. The pain response to mydriatic eyedrops in preterm infants. J Perinatol 2013;33:462-5.

23. Mitchell A, Hall RW, Erickson SW, Yates C, Lowery S, Hendrickson H. Systemic absorption of cyclopentolate and adverse events after retinopathy of prematurity exams. Curr Eye Res 2016;41:1601-7.

24. Alpay A, Canturk Ugurbas S, Aydemir C. Efficiency and safety of phenylephrine and tropicamide used in premature retinopathy: a prospective observational study. BMC Pediatr 2019;19:415.

25. International Committee for the Classification of Retinopathy of Prematurity. The International Classification of Retinopathy of Prematurity revisited. Arch Ophthalmol 2005;123:991-9.

26. Chow PP, Yip WW, Ho M, Lok JY, Lau HH, Young AL. Trends in the incidence of retinopathy of prematurity over a 10-year period. Int Ophthalmol 2019;39:903-9.

27. Yau GS, Lee JW, Tam VT, Liu CC, Chu BC, Yuen CY. Incidence and risk factors for retinopathy of prematurity in extreme low birth weight Chinese infants. Int Ophthalmol 2015;35:365-73.

28. Binkhathlan AA, Almahmoud LA, Saleh MJ, Srungeri S. Retinopathy of prematurity in Saudi Arabia: incidence, risk factors, and the applicability of current screening criteria. Br J Ophthalmol 2008;92:167-9.

29. Araz-Ersan B, Kir N, Akarcay K, et al. Epidemiological analysis of retinopathy of prematurity in a referral centre in Turkey. Br J Ophthalmol 2013;97:15-7.

30. Jiang JB, Zhang ZW, Zhang JW, Wang YL, Nie C, Luo XQ. Systemic changes and adverse effects induced by retinopathy of prematurity screening. Int J Ophthalmol 2016;9:1148-55.

31. Mitchell AJ, Green A, Jeffs DA, Roberson PK. Physiologic effects of retinopathy of prematurity screening examinations. Adv Neonatal Care 2011;11:291-7.

32. Schumacher AC, Ball ML, Arnold AW, Grendahl RL, Winkle RK, Arnold RW. Oculocardiac reflex during ROP exams. Clin Ophthalmol 2020;14:4263-9.

33. Yanovitch TL, Siatkowski RM, McCaffree M, Corff KE. Retinopathy of prematurity in infants with birth weight ≥1250 grams-incidence, severity, and screening guideline cost-analysis. J AAPOS 2006;10:128-34.

34. Kelkar J, Kelkar A, Sharma S, Dewani J. A mobile team for screening of retinopathy of prematurity in India: cost-effectiveness, outcomes, and impact assessment. Taiwan J Ophthalmol 2017;7:155-9.

35. Zupancic JA, Ying GS, de Alba Campomanes A, Tomlinson LA, Binenbaum G; G-ROP Study Group. Evaluation of the economic impact of modified screening criteria for retinopathy of prematurity from the Postnatal Growth and ROP (G-ROP) study. J Perinatol 2020;40:1100-8.

36. Xie W, Liang C, Xiang D, Chen F, Wang J. Resilience, anxiety and depression, coping style, social support and their correlation in parents of premature infants undergoing outpatient fundus examination for retinopathy of prematurity. Psychol Health Med 2021;26:1091-9.

37. Löfqvist C, Andersson E, Sigurdsson J, et al. Longitudinal postnatal weight and insulin-like growth factor I measurements in the prediction of retinopathy of prematurity. Arch Ophthalmol 2006;124:1711-8.

38. Löfqvist C, Hansen-Pupp I, Andersson E, et al. Validation of a new retinopathy of prematurity screening method monitoring longitudinal postnatal weight and insulinlike growth factor I. Arch Ophthalmol 2009;127:622-7.

39. Eckert GU, Fortes Filho JB, Maia M, Procianoy RS. A predictive score for retinopathy of prematurity in very low birth weight preterm infants. Eye (Lond) 2012;26:400-6.

40. Binenbaum G, Ying GS, Quinn GE, et al. A clinical prediction model to stratify retinopathy of prematurity risk using postnatal weight gain. Pediatrics 2011;127:e607-14.

41. Binenbaum G, Ying GS, Quinn GE, et al. The CHOP postnatal weight gain, birth weight, and gestational age retinopathy of prematurity risk model. Arch Ophthalmol 2012;130:1560-5.

42. Cao JH, Wagner BD, McCourt EA, et al. The Colorado-retinopathy of prematurity model (CO-ROP): postnatal weight gain screening algorithm. J AAPOS 2016;20:19-24.

43. Ricard CA, Dammann CE, Dammann O. Screening tool for early postnatal prediction of retinopathy of prematurity in preterm newborns (STEP-ROP). Neonatology 2017;112:130-6.

44. Binenbaum G, Bell EF, Donohue P, et al. Development of modified screening criteria for retinopathy of prematurity: primary results from the postnatal growth and retinopathy of prematurity study. JAMA Ophthalmol 2018;136:1034-40.

45. Binenbaum G, Tomlinson LA, de Alba Campomanes AG, et al. Validation of the postnatal growth and retinopathy of prematurity screening criteria. JAMA Ophthalmol 2019;138:31-7.

46. Iu LP, Yip WW, Lok JY, Fan MC, Lai CH, Ho M, Young AL. Prediction model to predict type 1 retinopathy of prematurity using gestational age and birth weight (PW-ROP). Br J Ophthalmol 2023;107:1007-11.