Hong Kong Med J 2025;31:Epub 12 Sep 2025

© Hong Kong Academy of Medicine. CC BY-NC-ND 4.0

ORIGINAL ARTICLE

The scope and impact of original clinical research by Hong Kong public healthcare professionals

Peter YM Woo, MMedSc, FRCS1,2; Desiree KK Wong, MB, BS1; Queenie HW Wong, MB, ChB1; Calvin KL Leung, MB, BS1; Yuki HK Ip, MB, BS1; Danise M Au, MB, BS1; Tiger SF Shek, MB, ChB1; Bertrand Siu, MB, BS1; Charmaine Cheung, MB, BS1; Kevin Shing, MB, BS1; Anson Wong, MB, ChB1; Yuti Khare, MB, BS1; Omar WK Tsui, MB, BS1; Noel HY Tang, BSc3; KM Kwok, MSc3; MK Chiu, MSc3; YF Lau, MPhil3; Keith HM Wan, MB, BS, FRCS4; WC Leung, MD, FRCOG5

1 Department of Neurosurgery, Kwong Wah Hospital, Hong Kong SAR, China

2 Department of Neurosurgery, Prince of Wales Hospital, Hong Kong SAR, China

3 Centre for Clinical Research and Biostatistics, The Chinese University of Hong Kong, Hong Kong SAR, China

4 Department of Orthopaedics and Traumatology, Kwong Wah Hospital, Hong Kong SAR, China

5 Department of Obstetrics and Gynaecology, Kwong Wah Hospital, Hong Kong SAR, China

Corresponding author: Dr Peter YM Woo (wym307@ha.org.hk)

Abstract

Introduction: This study reviewed the landscape of clinical research conducted by public hospital clinicians in Hong Kong. It also explored whether an association exists between academic productivity and clinical performance.

Methods: This was a territory-wide retrospective study of peer-reviewed original clinical research conducted by clinicians providing acute medical care at non-university public hospitals between 2016 and 2021. Citations were retrieved from the MEDLINE biomedical literature database. Scientometric analysis was performed by collecting journal-level, article-level, and author-level performance indicators. Clinical performance was assessed using crude mortality rate, inpatient hospitalisation duration, and the number of 30-day unplanned readmissions.

Results: In total, 3142 peer-reviewed original clinical research studies were published, of which 29.3% (n=921) were conducted by non-university hospital public healthcare professionals. The most productive specialty was clinical oncology, with 0.56 articles published per clinician. The overall mean journal impact factor and Eigenfactor score were 2.34 ± 3.72 and 0.01 ± 0.07, respectively. At the article level, the mean total number of citations was 6.33 ± 24.17, the mean Field Citation Ratio was 3.37 ± 2.04, and the mean Relative Citation Ratio (RCR) was 0.82 ± 3.32. A significant negative correlation was observed between crude mortality rate and RCR (r=-0.63; P=0.022). A negative correlation was also identified between 30-day readmissions and RCR (r=-0.72; P=0.006).

Conclusion: Clinicians in Hong Kong’s public healthcare system are research-active and have achieved a substantial degree of influence in their respective fields. Research performance was negatively correlated with hospital crude mortality rates and 30-day unplanned readmissions.

New knowledge added by this study

- More than 10% of clinicians at non-university public hospitals in Hong Kong have engaged in original clinical research as principal investigators.

- In total, 29.3% of clinical research published in Hong Kong was conducted by professionals from non-university public hospitals.

- The quality of the research undertaken was encouraging. All medical specialties achieved a Field Citation Ratio greater than 1.00, indicating that their article citation rates exceeded those of counterparts in the same research field.

- Clinical research impact, as measured by citation metrics, is negatively correlated with hospital crude mortality rates and 30-day unplanned readmissions.

- The establishment of a research-supportive infrastructure and dedicated funding for non-university public hospitals may contribute to improved patient outcomes.

Introduction

Clinical research is fundamental to the advancement of medicine. More than a quarter of a century after it began as a nascent movement in the early 1990s, evidence-based medicine has revolutionised healthcare by producing trustworthy observations that support better-informed clinical decision-making and health policy.1 2 Research forms the foundation of evidence-based medicine and plays an important role in understanding disease, thereby contributing to the development of novel therapeutic strategies.3 This contribution has translated into quantifiable outcomes: participation in clinical research can lead to significant reductions in patient mortality and inpatient length of stay (LOS).4 5 6 7 8 9 Clinical research benefits individual patients and also drives socio-economic growth. The UK National Institute for Health and Care Research (NIHR) observed that every 1.0 GBP invested in clinical trials yielded a return of up to 7.6 GBP in economic benefit.10

However, a substantial proportion of frontline clinicians typically do not engage in research activities relevant to their daily practice. A cross-sectional survey in North America revealed that 32% of respondents did not know how to participate in research.11 A similar study among Hong Kong family physicians indicated that 27% had no previous research experience.3

Hong Kong is an ideal location for conducting clinical research because of its world-class universal healthcare infrastructure, electronic medical records system, use of English in medical documentation, and pool of internationally reputable investigators.12 13 Additionally, the Hospital Authority (HA), the statutory body responsible for managing all public hospitals in the city, provides more than 90% of all inpatient bed-days, and the patient follow-up rate is correspondingly high.14 Despite these favourable factors, according to the Our Hong Kong Foundation (a non-governmental, non-profit public policy institute), the number of clinical trials conducted in Hong Kong declined by 22% between 2015 and 2021, compared with a mean increase of 48% in developed countries and 285% in Mainland China.15

No comprehensive review of the clinical research activity of Hong Kong public healthcare professionals has been conducted. Apart from the UK and Spain, no other region has evaluated the influence of clinician engagement in research on key performance indicators within a universal healthcare system.4 5 6 This study was performed to determine Hong Kong’s research productivity in terms of peer-reviewed published clinical studies, its scholarly impact, and its influence on outcomes for hospitalised patients, including LOS, crude mortality, and 30-day unplanned readmission. A comparative analysis of research productivity and quality across medical disciplines was also performed. Findings from this review could inform health policy by providing a stronger foundation for the evidence-based allocation of resources to support an efficient and sustainable research ecosystem within the HA.

Methods

This was a territory-wide retrospective observational study of peer-reviewed original clinical research conducted by HA medical staff at general acute care hospitals, in which the staff member served as principal investigator. The review included articles published in the biomedical literature from 1 January 2016 to 30 April 2021. Research articles from non-university institutions, comprising 30% (13/43) of all HA hospitals, were included. Citations covering this 5.5-year period were retrieved from MEDLINE, the United States National Library of Medicine’s bibliographic database. The database was queried via the PubMed Advanced Search Builder for all studies published within the review period in which the first author’s stated affiliation was a Hong Kong hospital. The R package RISmed was used to extract author affiliation data from the PubMed search results into R, an open-source statistical software environment.16 17 The list of citations was then manually reviewed to confirm that each study had been conducted by a clinician from an HA hospital.
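The authors performed this retrieval with RISmed in R; the Python sketch below is not their code. It only illustrates the automated part of the screening rule described above (publication date inside the review window and a first-author affiliation naming a Hong Kong hospital), assuming a hypothetical `Citation` record whose affiliation strings have already been downloaded. Keyword matching on free-text affiliations is a crude assumption, which is why the manual confirmation step remains necessary.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Citation:
    """Hypothetical stand-in for one downloaded PubMed record."""
    pmid: str
    pub_date: date
    # Author affiliation strings in author order; index 0 is the first author.
    affiliations: list = field(default_factory=list)


def first_author_at_hk_hospital(record: Citation) -> bool:
    """Crude keyword test on the first author's stated affiliation."""
    if not record.affiliations:
        return False
    text = record.affiliations[0].lower()
    return "hong kong" in text and "hospital" in text


def screen(records, start: date, end: date) -> list:
    """Keep records published within [start, end] whose first author lists a
    Hong Kong hospital. Survivors still need manual confirmation of HA employment."""
    return [
        r for r in records
        if start <= r.pub_date <= end and first_author_at_hk_hospital(r)
    ]
```

Only the first affiliation is inspected, mirroring the first-author criterion; a record whose Hong Kong hospital author appears later in the byline is deliberately dropped.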

Published abstracts were categorised according

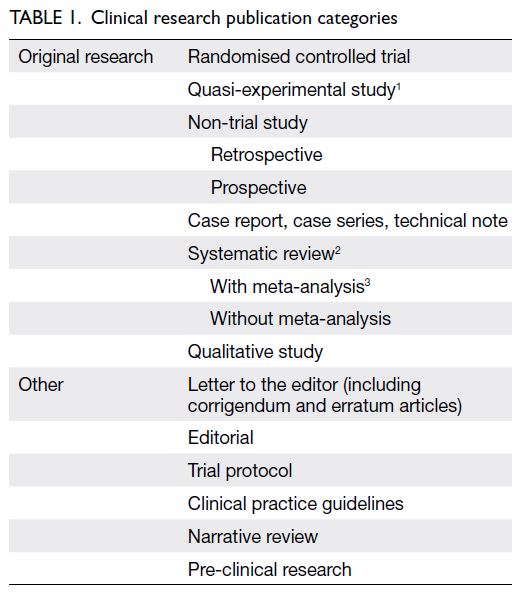

to study design, article type, and corresponding medical specialty (Table 1).18 Systematic reviews

performed in accordance with the PRISMA

(Preferred Reporting Items for Systematic Reviews

and Meta-Analyses) 2020 statement were regarded

as original research.19 20 Articles not subject to peer

review—such as academic conference proceedings,

trial protocols, editorials, letters to the editor, and

erratum or corrigendum statements—were excluded.

Preclinical studies and secondary research articles,

including clinical practice guidelines, position

statements, book chapters, and narrative topical

reviews, were also excluded. Finally, collaborative

studies in which the principal investigator was not

employed by the HA were excluded.
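The inclusion and exclusion rules above can be expressed as a small decision function. This is a hypothetical sketch: the category labels in `EXCLUDED_TYPES` are paraphrased from the text, and treating systematic reviews that do not follow PRISMA 2020 as ineligible is an assumption inferred from the criteria rather than a rule stated verbatim.

```python
# Categories excluded under the criteria above (labels paraphrased, hypothetical).
EXCLUDED_TYPES = {
    # not subject to peer review
    "conference proceedings", "trial protocol", "editorial",
    "letter to the editor", "erratum", "corrigendum",
    # preclinical or secondary research
    "preclinical study", "clinical practice guideline", "position statement",
    "book chapter", "narrative review",
}


def is_eligible(article_type: str, follows_prisma_2020: bool = False,
                pi_employed_by_ha: bool = True) -> bool:
    """Return True if an article counts as original clinical research."""
    if not pi_employed_by_ha:
        return False  # collaborative studies led from outside the HA
    kind = article_type.strip().lower()
    if kind == "systematic review":
        # Assumption: only PRISMA 2020-compliant reviews count as original research.
        return follows_prisma_2020
    return kind not in EXCLUDED_TYPES
```

Checking employment first means a PRISMA-compliant review led from outside the HA is still excluded, consistent with the final criterion.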

The primary study endpoint was research productivity, measured by the total number of original research studies published, with comparisons made between university- and non–university-affiliated HA hospitals. The hypothesis was that university-affiliated hospitals would produce more original clinical research studies than non-university hospitals because of their access to tertiary education institution resources. Secondary endpoints included research productivity across medical specialties. To control for workforce discrepancies across medical disciplines, the mean number of full-time clinicians for each specialty from 2016 to 2021 was determined. The number of articles per clinician and the proportion of the workforce acting as principal investigator for each specialty were then established.

The quality of the research, as reflected by the scientometric performance of each published article, was also assessed. It was hypothesised that research quality from university-affiliated hospitals would be superior to that of their non-university counterparts.

Another secondary endpoint was patient outcomes for each non–university-affiliated acute care hospital from 2016 to 2021: crude mortality rate per 100 000 hospitalised patients, length of inpatient stay, and annual number of unplanned readmissions within 30 days of discharge. It was hypothesised that increased research productivity would translate into improved patient outcomes, and an inter-hospital comparison of these key performance indicators was performed. Patient outcome data were collected from the HA’s Clinical Data Analysis and Reporting System and the HA Management Information System.
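As a sketch of how the hospital-level outcome indicators could be derived from episode-level data, the Python below computes a crude mortality rate per 100 000 hospitalised patients and counts unplanned readmissions within 30 days of a previous discharge. The episode tuple layout, the inclusive 0-30-day window, and the function names are assumptions for illustration; the HA’s Clinical Data Analysis and Reporting System may define these indicators differently.

```python
from collections import defaultdict
from datetime import date

# Hypothetical episode layout: (patient_id, admit_date, discharge_date, unplanned)
Episode = tuple[str, date, date, bool]


def crude_mortality_per_100k(deaths: int, hospitalised: int) -> float:
    """Crude (unadjusted) deaths per 100 000 hospitalised patients."""
    return deaths * 100_000 / hospitalised


def count_unplanned_readmissions(episodes: list[Episode], window_days: int = 30) -> int:
    """Count unplanned admissions starting within `window_days` of the same
    patient's previous discharge (window inclusive, an assumption)."""
    by_patient = defaultdict(list)
    for patient_id, admitted, discharged, unplanned in episodes:
        by_patient[patient_id].append((admitted, discharged, unplanned))

    count = 0
    for stays in by_patient.values():
        stays.sort()  # chronological order by admission date
        for previous, current in zip(stays, stays[1:]):
            gap_days = (current[0] - previous[1]).days
            if current[2] and 0 <= gap_days <= window_days:
                count += 1
    return count
```

Only consecutive stays of the same patient are compared, so a readmission is always measured against the most recent discharge.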

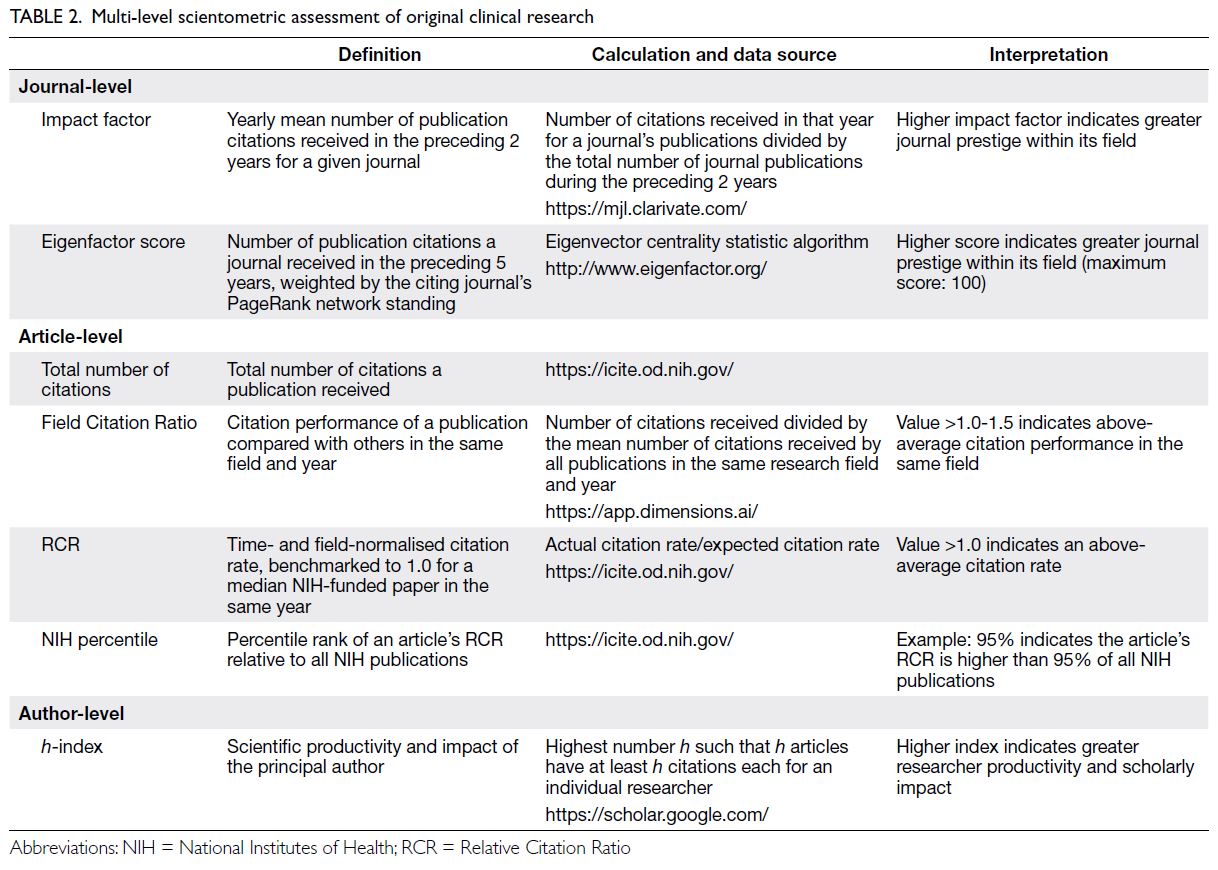

To evaluate research quality, a multi-level scientometric approach was utilised by collecting journal-, article-, and individual author-level data. For journal-level scientometric assessment, two indices were determined: the journal’s mean impact factor (IF) and Eigenfactor score (ES) from 2016 to 2021. These indices were obtained from Clarivate (London, UK), a bibliometric analytics company that manages the Science Citation Index, an online indexing database containing academic journal citation data.1 A journal’s IF is a scientometric index reflecting the mean number of citations received in a given year by articles that the journal published during the preceding 2 years.21 This metric constitutes a reasonable indicator of research quality for general medical journals.22 The ES ranks journals using eigenvector centrality statistics to evaluate the importance of citations within a scholarly network.23 Utilising an algorithm similar to Google’s PageRank (Alphabet Inc, Mountain View [CA], US), the ES considers both the number of citations received and the prestige of the citing journals.

For article-level metrics, the total number of citations per article (TNC), Relative Citation Ratio (RCR), Field Citation Ratio (FCR), and National Institutes of Health (NIH) percentile attained were documented (Table 2). Author-level data were collected by determining the h-index of the principal investigator (Table 2).24 All scientometric data were censored on 30 June 2023.
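To make the two journal-level indices concrete, the sketch below computes a two-year IF and a simplified PageRank-style eigenvector score on a toy citation matrix. This is an illustration, not Clarivate’s Eigenfactor algorithm: the published ES uses a 5-year citation window and weights its teleportation step by each journal’s share of published articles, whereas here teleportation is uniform and the damping factor of 0.85 is an assumption borrowed from PageRank. The function names are hypothetical.

```python
def two_year_impact_factor(citations_in_year: int, citable_items: int) -> float:
    """Citations received in year Y by items published in Y-1 and Y-2,
    divided by the number of citable items published in Y-1 and Y-2."""
    return citations_in_year / citable_items


def eigenvector_scores(cites, damping=0.85, tol=1e-12, max_iter=1000):
    """Simplified PageRank-style journal influence scores (sum to 1).

    cites[i][j] = citations from journal j (citing) to journal i (cited).
    Journal self-citations (i == j) are ignored.
    """
    n = len(cites)
    # Outgoing citations of each citing journal, excluding self-citations.
    out_totals = [sum(cites[i][j] for i in range(n) if i != j) for j in range(n)]
    scores = [1.0 / n] * n
    for _ in range(max_iter):
        # Journals that cite nobody spread their score evenly.
        dangling = sum(scores[j] for j in range(n) if out_totals[j] == 0)
        new = []
        for i in range(n):
            # Each citation passes on a share of the citing journal's own score,
            # so citations from influential journals count for more.
            inflow = sum(
                scores[j] * cites[i][j] / out_totals[j]
                for j in range(n)
                if j != i and out_totals[j] > 0
            )
            new.append((1 - damping) / n + damping * (inflow + dangling / n))
        if sum(abs(a - b) for a, b in zip(new, scores)) < tol:
            return new
        scores = new
    return scores
```

For example, a journal whose 200 citable items from the previous 2 years attracted 600 citations this year has an IF of 3.0. In the eigenvector score, by contrast, two journals with identical citation counts can differ if one is cited mainly by highly ranked journals.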

Independent-samples t tests and chi-squared tests were conducted to compare variables. Spearman’s rank correlation analysis was performed to assess correlations between research metrics and hospitalised patient outcomes. P values of less than 0.05 were considered statistically significant. All statistical tests were performed using SPSS (Windows version 21.0; IBM Corp, Armonk [NY], US) and R (version 4.5.0; R Foundation, Vienna, Austria).17
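The correlation analysis pairs one research metric with one outcome per hospital. A minimal pure-Python sketch of Spearman’s rank correlation follows: values are converted to ranks (tied values receive their average rank), Pearson’s correlation is computed on those ranks, and the usual t approximation with n − 2 degrees of freedom gives the test statistic. The function names are hypothetical; in practice, `scipy.stats.spearmanr` returns the coefficient and a P value directly.

```python
import math


def average_ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman_rho(x, y):
    """Spearman's coefficient = Pearson's r computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def t_statistic(rho, n):
    """t approximation for testing rho = 0, with n - 2 degrees of freedom."""
    return rho * math.sqrt((n - 2) / (1 - rho ** 2))
```

Assuming one observation per non-university hospital (13 in this review), a coefficient of -0.72 gives t ≈ -3.44 on 11 degrees of freedom; the two-sided P value then comes from the t distribution (for example, via `scipy.stats.t.sf`).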

Results

Overall original clinical research productivity in Hong Kong

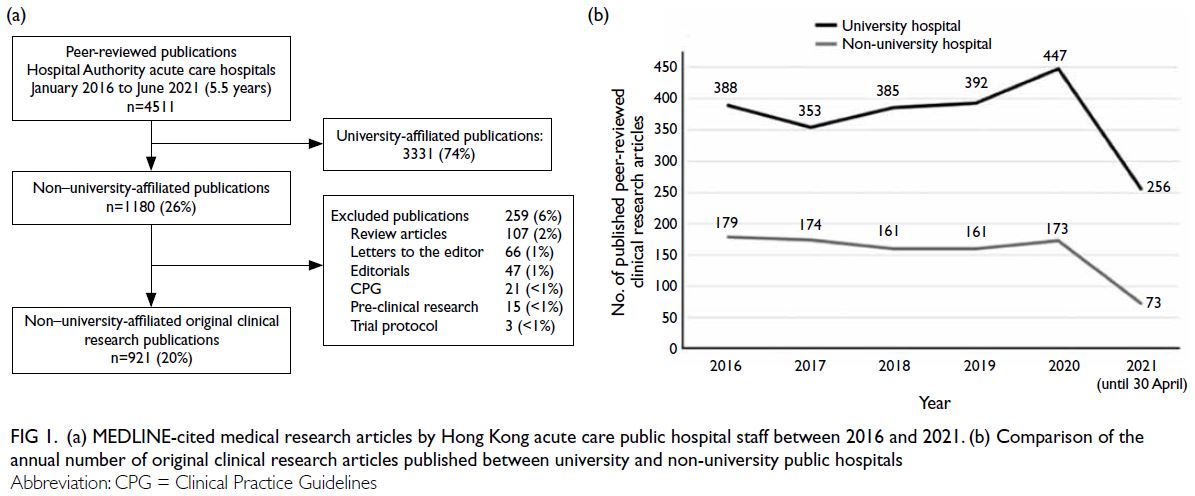

During the 5.5-year period, 4511 peer-reviewed

articles were published by Hong Kong medical

researchers from acute care public hospitals. Of

these, 3142 (69.7%) were original clinical research

studies. In total, 29.3% (n=921) of the articles were authored by non-university hospital investigators—a

significantly smaller proportion than that published by

their university hospital counterparts (independent-samples

t test, P<0.001) [Fig 1a]. Throughout the review period, the annual number of publications

by non-university hospital investigators remained

consistent, with a mean of 167 ± 8 per year (t test,

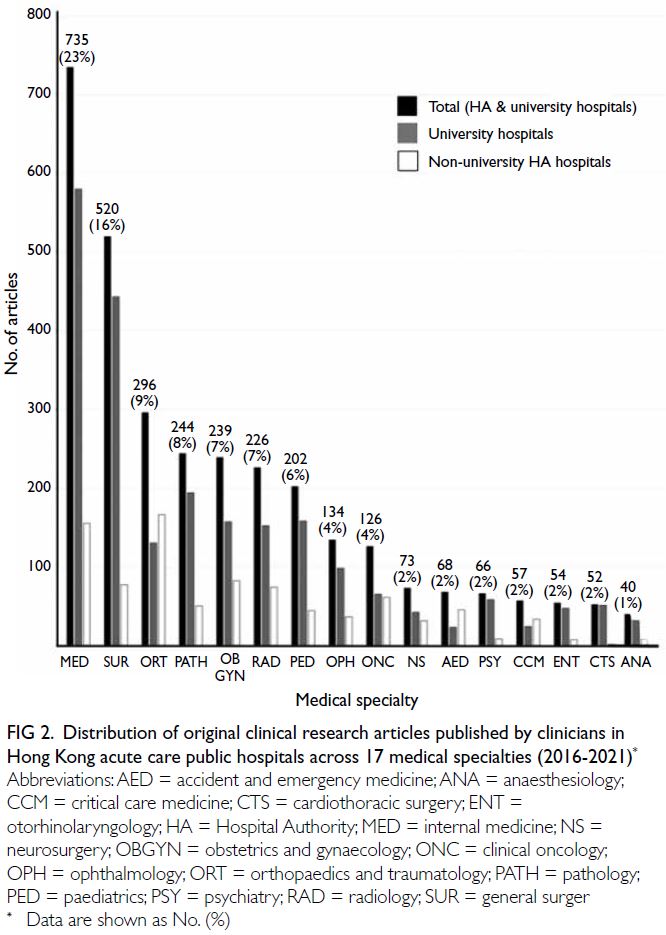

P=0.24) [Fig 1b]. Overall, the medical specialties that produced the most articles were internal medicine,

representing 23.4% (n=735) of published studies, and

general surgery, representing 16.5% (n=520) [Fig 2].

Figure 1. (a) MEDLINE-cited medical research articles by Hong Kong acute care public hospital staff between 2016 and 2021. (b) Comparison of the annual number of original clinical research articles published between university and non-university public hospitals

Figure 2. Distribution of original clinical research articles published by clinicians in Hong Kong acute care public hospitals across 17 medical specialties (2016-2021)

The majority of excluded articles were

narrative reviews that did not meet PRISMA criteria,

followed by letters to the editor and editorials

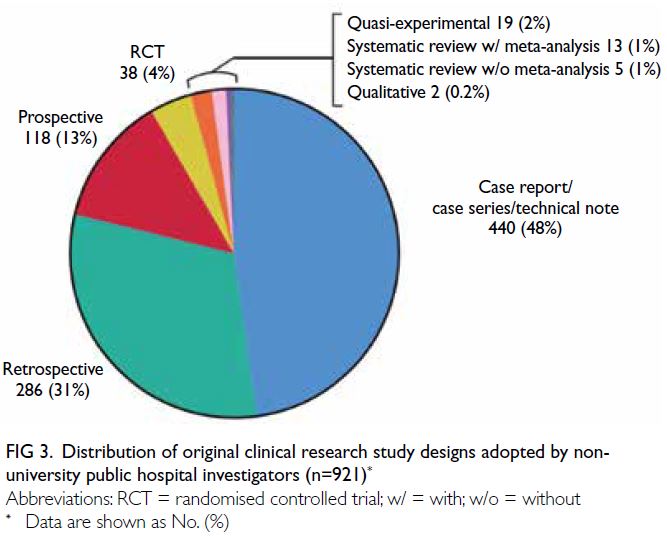

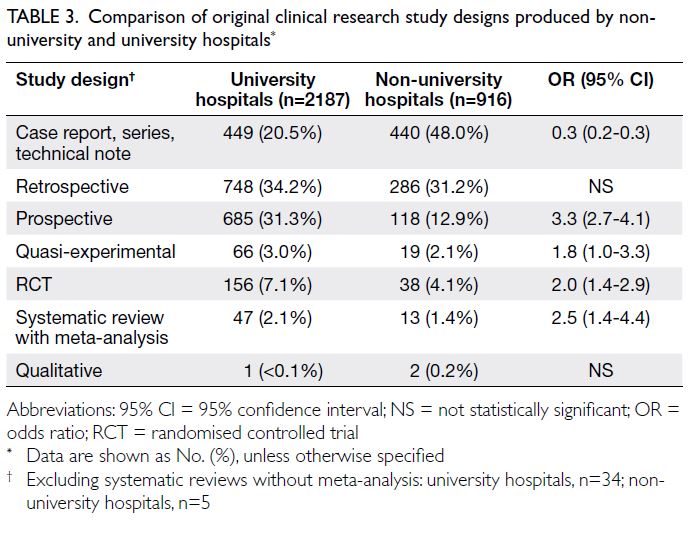

(Fig 1a). Regarding study design, most research

articles were case reports, followed by retrospective

cohort and prospective cohort studies (Fig 3).

A significantly larger proportion of case reports was published by non-university hospital staff than by university hospital staff (P<0.001). In addition to retrospective studies, university hospital investigators were significantly more likely to publish higher level-of-evidence research articles (Table 3).

Figure 3. Distribution of original clinical research study designs adopted by non-university public hospital investigators (n=921)

Table 3. Comparison of original clinical research study designs produced by non-university and university hospitals

Original clinical research productivity among non-university general acute care hospitals and comparisons between medical specialties

The majority of non-university hospital principal

investigators were clinicians, with 3.7% (n=34) of

studies conducted by nurses or allied healthcare

professionals. Among the 887 articles authored

by clinicians, 544 individuals were identified,

yielding an author-to-article ratio of 1:1.6. These

researchers comprised 10.8% of the 5056 full-time

non-university hospital clinicians employed during

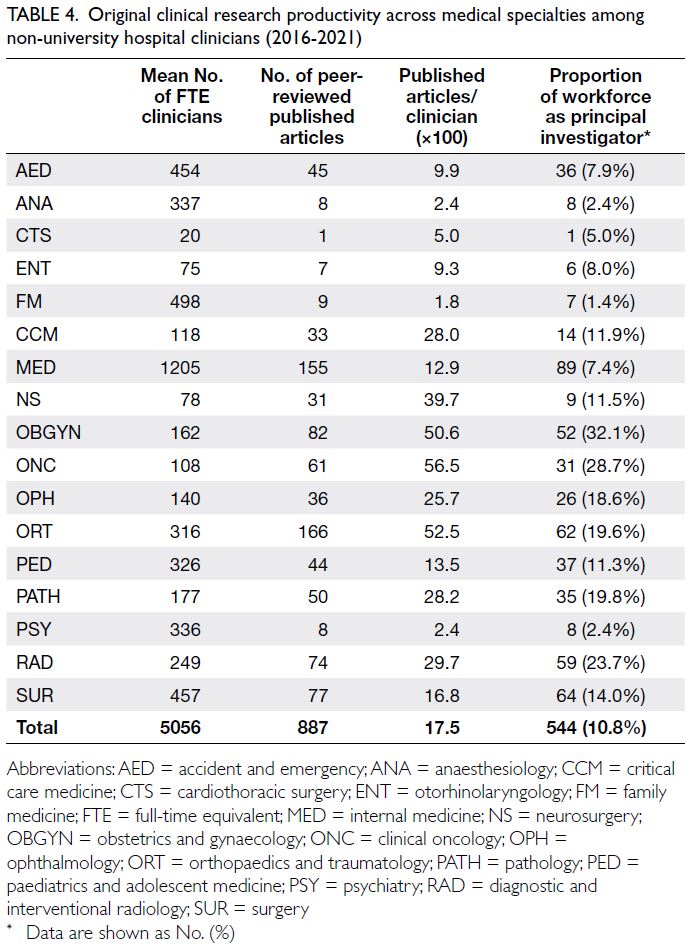

the study period. The most research-productive

specialties among non-university hospitals were

orthopaedics, followed by internal medicine and

obstetrics and gynaecology (Table 4). Among all the

medical specialties, the mean number of articles

per clinician (×100) was 17.5 ± 22.3 (range, 1.8-56.4). After controlling for workforce discrepancies

between disciplines, clinical oncology, orthopaedics

and traumatology, and obstetrics and gynaecology

constituted the most productive specialties in

terms of the mean number of articles published

per clinician (Table 4). Collectively, these three

specialties published significantly more studies

than the other disciplines (independent-samples

t test, P<0.001) [Table 4]. The most research-active

specialty was obstetrics and gynaecology, where one-third

of clinicians acted as principal investigators—this proportion was significantly larger relative to

other medical disciplines (t test, P=0.03) [Table 4].
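The workforce-adjusted figures above are simple ratios. The sketch below reproduces the pooled values from the counts reported in this section (887 clinician-led articles, 544 clinician principal investigators, and 5056 full-time clinicians); the function name is hypothetical, and the per-specialty figures in Table 4 would apply the same ratios to each specialty’s own counts.

```python
def workforce_productivity(articles: int, investigators: int, workforce: int) -> dict:
    """Pooled productivity ratios for a group of clinicians."""
    return {
        # Mean articles per principal investigator (1.63 -> ratio of 1:1.6).
        "articles_per_investigator": articles / investigators,
        # Articles per clinician, scaled by 100 to match the (x100) convention.
        "articles_per_clinician_x100": articles * 100 / workforce,
        # Percentage of the workforce acting as principal investigator.
        "percent_as_investigator": investigators * 100 / workforce,
    }


result = workforce_productivity(articles=887, investigators=544, workforce=5056)
for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

The 10.76% printed for the share of clinicians acting as principal investigator rounds to the 10.8% reported above.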

Table 4. Original clinical research productivity across medical specialties among non-university hospital clinicians (2016-2021)

Original clinical research quality among non-university general acute care hospitals and comparisons between medical specialties

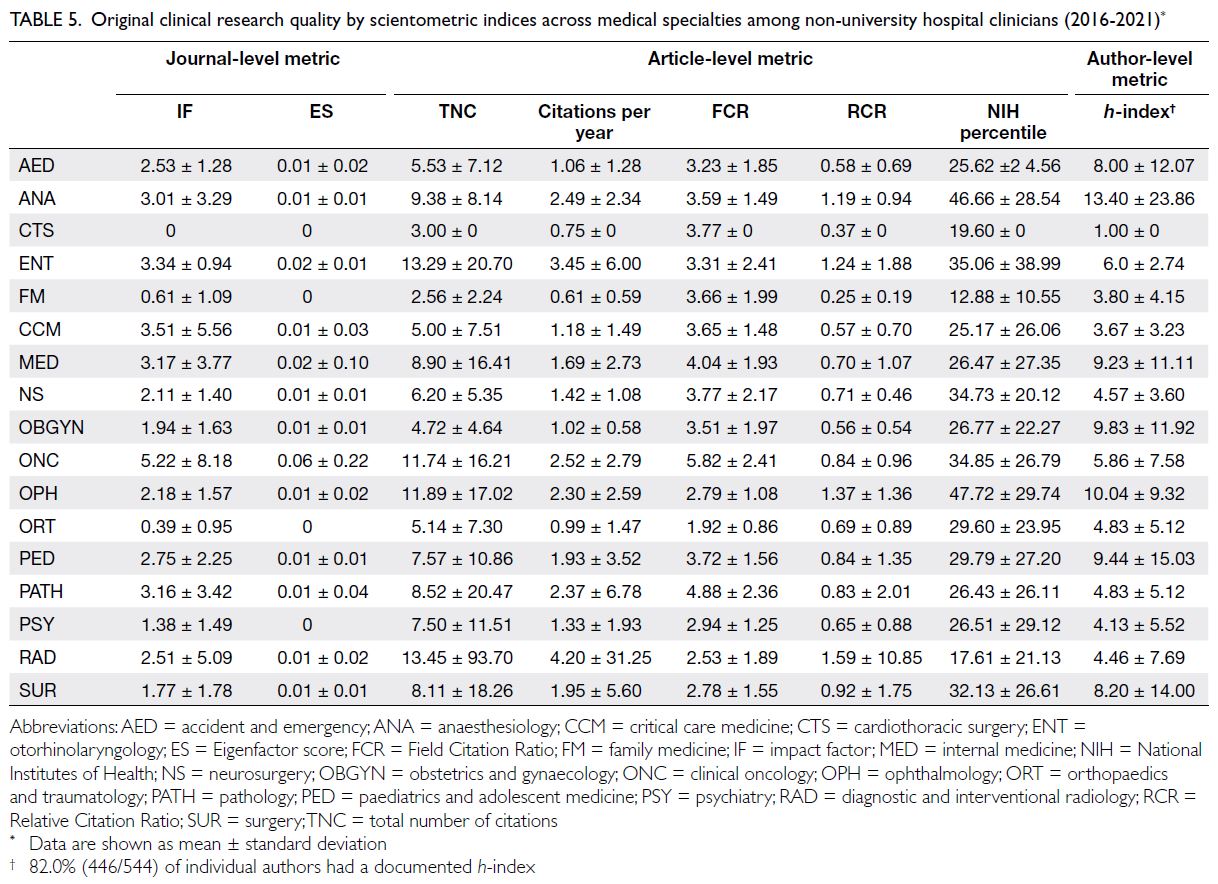

Regarding scientometric performance, the overall mean journal IF and ES were 2.34 ± 3.72 and 0.01 ± 0.07, respectively. Journal IF did not differ significantly according to the principal investigator’s medical specialty (independent-samples t test, P=0.31). However, clinical oncologists published their research in journals with a significantly higher ES than those in other medical disciplines (P<0.001) [Table 5].

Table 5. Original clinical research quality by scientometric indices across medical specialties among non-university hospital clinicians (2016-2021)

For studies performed by non-university clinicians during this period, at the individual article level, the mean TNC per study was 6.33 ± 24.17, the mean number of citations per year was 1.81 ± 9.52, the mean RCR was 0.82 ± 3.32, the mean FCR was 3.37 ± 2.04, and the mean NIH percentile achieved was 28.73 ± 25.85. Combined, radiology and otorhinolaryngology research articles had a significantly higher TNC per study (P<0.01) and a higher total number of annual citations (P<0.001) compared with other medical disciplines (Table 5). Articles in anaesthesiology, ophthalmology, otorhinolaryngology, and radiology had a mean RCR exceeding 1.00, indicating that they were cited at a higher rate than expected from their co-citation networks. In particular, anaesthesiology and ophthalmology studies achieved the highest mean NIH percentile rankings: their research outperformed 47% of all NIH-associated publications. Combined, anaesthesiology and ophthalmology studies also had a significantly higher NIH percentile ranking than other medical disciplines (P=0.001). All medical specialties had an FCR exceeding 1.00, indicating that their article citation rates were higher than those of their counterparts in the same research field. Oncology research had a significantly higher mean FCR (5.82 ± 2.41) than other disciplines (P<0.001) [Table 5].
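The FCR interpretation above can be illustrated with a short calculation. Assuming the common definition used by the Dimensions database (an article’s citation count divided by the mean citation count of articles from the same field and publication year), an FCR above 1.00 means the article is cited more than the average for its field. The function name and the baseline values are hypothetical.

```python
from statistics import mean


def field_citation_ratio(article_citations: int, field_year_citations: list) -> float:
    """Article citations divided by the mean citations of articles from the
    same field and publication year (1.0 = field average)."""
    baseline = mean(field_year_citations)
    if baseline == 0:
        raise ValueError("field baseline has no citations")
    return article_citations / baseline
```

An article cited 8 times in a field-year whose articles average 4 citations has an FCR of 2.0, that is, twice the field average.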

In terms of author-level scientometric

performance, 18.0% (98/544) of authors did not

have a documented h-index. The mean h-index

for the remaining researchers was 7.54 ± 10.98.

Anaesthesiologists had a significantly higher

h-index relative to other specialties (independent-samples

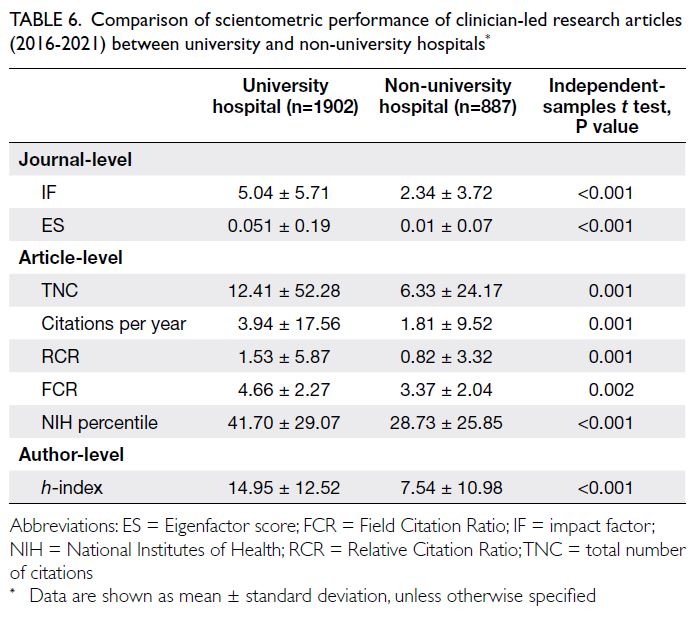

t test, P=0.01) [Table 5]. A comparison of

scientometric outcomes between university and

non-university clinical research also demonstrated

uniformly superior performance by academic

institution investigators (Table 6).

Table 6. Comparison of scientometric performance of clinician-led research articles (2016-2021) between university and non-university hospitals

Original clinical research and patient outcomes

For non-university-affiliated hospitals, the overall mean crude mortality rate per 100 000 hospitalised patients was 27 722 ± 5208. Spearman’s rank analysis identified significant negative correlations of mortality rate with TNC (r=-0.69; P=0.01) and RCR (r=-0.63; P=0.022). The overall annual mean number of unplanned readmissions within 30 days of discharge was 1408 ± 756. Similarly, there were significant negative correlations of readmissions with TNC (r=-0.76; P=0.02) and RCR (r=-0.72; P=0.006). The overall mean LOS was 11.7 ± 3.1 days. No significant correlations were detected between LOS and TNC (r=-0.32; P=0.29) or between LOS and RCR (r=-0.36; P=0.23). None of the other scientometric indices were associated with the crude mortality rate, number of unplanned readmissions, or LOS.

Discussion

This study reviewed the breadth and quality of original clinical research conducted by Hong Kong’s public healthcare professionals. It is encouraging that, despite the heavy workload of frontline clinicians employed in non-university public hospitals, more than 10% of the workforce engaged in original research as principal investigators. Their endeavours contributed to nearly one-third of the original peer-reviewed clinical research publications produced in the territory. A multi-level scientometric approach was adopted to assess research quality, and our findings indicate that the studies undertaken met the standards of their respective fields. Although the IF and ES values of the journals in which the research was published were not high, all medical specialties achieved a mean FCR of over 1.00. Notably, anaesthesiology, ophthalmology, otorhinolaryngology, and radiology articles attained a mean RCR exceeding 1.00.

Assessing research impact: the Relative Citation Ratio

Introduced in 2016, the RCR is a relatively novel article-level metric that measures a publication’s influence within the biomedical literature.24 It was developed in response to the limitations of conventional indicators of scientific quality, such as the IF and h-index.25 26 For example, as multidisciplinary collaborations have become more common, researchers in disparate fields may have unequal access to high-profile journals, undermining the IF as a reliable reflection of a study’s performance.21 24 25 Likewise, the h-index does not consider an author’s total number of citations and instead reflects cumulative output, which can disadvantage early-career researchers.
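The h-index is the largest number h such that an author has h publications each cited at least h times. The sketch below (function name hypothetical) shows why it rewards cumulative output over citation totals: two highly cited papers yield an h-index of 2, whereas three modestly cited papers yield 3.

```python
def h_index(citations):
    """Largest h such that at least h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # most cited first
    h = 0
    for position, cites in enumerate(ranked, start=1):
        if cites >= position:
            h = position
        else:
            break  # later papers have even fewer citations
    return h
```

With `h_index([100, 90])` returning 2 and `h_index([3, 3, 3])` returning 3, the author with 190 citations ranks below the author with 9.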

Despite their limitations, the IF and h-index remain pivotal scientometric indices in decisions related to funding and career progression.25 26 Given that citations are widely recognised as a form of acknowledging a researcher’s contribution to the field, efforts have been made to formalise this practice into a quantifiable metric. Endorsed by the NIH, the RCR harnesses an article’s co-citation network, normalising the number of citations received according to the article’s publication time and research field. It is calculated as the ratio of the article’s actual citation rate (citations per year) to its expected citation rate, which is derived from the citation rate of its co-citation network and benchmarked against NIH-funded publications issued in the same year.24 In recent years, the RCR has gained recognition as a more reliable indicator of an article’s performance within its peer comparison group and is increasingly cited in research grant applications.27 28 29
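The RCR calculation can be sketched in a few lines under simplifying assumptions. Following the general approach described above, the article citation rate (citations per year) is divided by an expected rate obtained by regressing benchmark articles’ citation rates on their co-citation network citation rates. Here `field_rate` means that network-derived rate, which is distinct from the Dimensions FCR reported in this study; the benchmark pairs and function names are hypothetical, and the NIH method includes details (such as how citation years are counted and how the field rate is built from journal citation rates) that this sketch omits.

```python
def fit_line(xs, ys):
    """Ordinary least-squares intercept and slope for y = a + b * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return my - slope * mx, slope


def relative_citation_ratio(citations, years, field_rate, benchmark):
    """Simplified RCR = actual citation rate / expected citation rate.

    citations / years -> article citation rate (ACR)
    field_rate        -> citation rate of the article's co-citation network
    benchmark         -> (field_rate, ACR) pairs for NIH-funded articles
                         published in the same year (hypothetical data)
    """
    acr = citations / years
    intercept, slope = fit_line([f for f, _ in benchmark], [a for _, a in benchmark])
    expected = intercept + slope * field_rate  # benchmark-predicted citation rate
    return acr / expected
```

With a benchmark in which citation rate equals field rate, an article cited 12 times over 3 years in a field with a rate of 2.0 scores an RCR of 2.0, that is, twice the expected rate.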

Comparisons with university-affiliated hospitals

The present study showed that university hospitals not only outperformed non-university hospitals in terms of research productivity but also demonstrated greater influence across all scientometric outcomes. In addition to resource consolidation and the employment of clinician-scientists, another reason for this discrepancy might be the type of studies produced. Approximately half of the articles from non-university hospitals were case reports or technical notes, which provide a lower level of evidence in the evidence-based medicine hierarchy and consequently tend to receive fewer citations. Nonetheless, this form of research is more accessible to junior clinicians and can serve as a gateway to medical writing in resource-limited settings.30 Case reports offer valuable insights into the real-world implications of clinical practice, including findings that well-designed randomised controlled trials may fail to capture. They can also stimulate others to report similar observations, serving as a hypothesis-generating opportunity for subsequent systematic enquiry.31

Translating research impact into real-world patient outcomes

Few studies have tested the hypothesis that research activity results in improved patient outcomes.4 5 7 8 32 We observed that non-university hospitals with greater research impact, as measured by TNC and RCR, had lower crude mortality rates and fewer annual 30-day unplanned readmissions. These findings are supported by reports that patients treated at hospitals participating in clinical trials fared better in terms of 30-day post-intervention mortality and overall survival relative to those treated at hospitals without such arrangements. This trend has been observed for conditions including acute myocardial infarction, small-cell lung cancer, colorectal cancer, breast cancer, and ovarian cancer.5 33 34 35 36 37 The possibility of a trial effect was reinforced by a systematic review of 13 studies, which attributed this phenomenon to healthcare providers’ greater adherence to clinical practice guidelines and their inclination to adopt evidence-based practices.8 A subsequent systematic review of 33 studies further demonstrated that research activity improved healthcare system performance, as reflected by reductions in LOS and risk-adjusted mortality and by improvements in patient satisfaction.9 In contrast, few studies have quantitatively analysed peer-reviewed scientometric data and their relationship with patient outcomes. For specific disease conditions, a negative correlation was observed between acute myocardial infarction–related risk-adjusted mortality and a weighted citations ratio among 50 Spanish hospitals.7 A review of 147 National Health Service trusts in the UK demonstrated a negative correlation between the number of research article citations per admission and standardised mortality ratios.5 Econometric modelling using data from 189 Spanish hospitals detected a significant reduction in LOS among institutions that published more clinical research articles or had a higher TNC per article.6

Encouraging public hospital healthcare professionals to become principal investigators

There is increasing evidence that clinical research engagement improves patient outcomes, but several barriers to participation remain. First, clinicians have demanding responsibilities that often preclude involvement in this time-consuming and resource-intensive activity.38 39 40 Research is typically undertaken outside working hours, yet clinicians are under-recognised for these overtime efforts and are overburdened with administrative procedures. Research-supportive policies that provide protected time or incentivise clinicians through career advancement could help foster a more scholarly environment.40

Second, Hong Kong has a lengthy and duplicative clinical trial approval process. In a survey of 250 clinician-researchers, 90% reported that obtaining a phase I first-in-human study certificate from the Hong Kong SAR Government’s Department of Health required over 3 months.15 Additionally, for HA Clinical Research Ethics Committee study approvals, 50% of respondents reported that the process typically lasted more than 3 months, and multi-centre trials frequently required over a year to begin recruitment.15 The establishment of a primary review authority for investigative drug registration, similar to the United States Food and Drug Administration, European Medicines Agency, or China’s National Medical Products Administration, could help streamline regulatory pathways.

Third, most funding agencies favour academician-led research over community clinician-led efforts.38 For example, the existing Hong Kong SAR Government’s Health and Medical Research Fund and the Health Care and Promotion Fund, with a combined annual budget of US$530 million, have primarily been allocated to academicians with access to robust research infrastructure. The lack of financial support for community hospitals to develop research capabilities can have clinical implications.4 38 39 40 A review of funding allocations from the NIHR revealed that National Health Service trusts receiving relatively lower levels of research funding had higher risk-adjusted mortality.4 A survey of healthcare professionals in Ontario, Canada, showed that 46% were dissatisfied with their research involvement, although 83% agreed it benefited their careers.39 The major barriers identified were a lack of mentorship and institutional stewardship.39

The establishment of a clinical research institute and academy dedicated to supporting early-career clinician-scientists could help address these challenges.15 Modelled after the NIHR, the provision of publicly funded administrative services to accelerate translational research (facilitating grant applications for non–university-affiliated hospitals, offering biostatistical support, training research support staff, and nurturing partnerships in a multi-stakeholder ecosystem) could be transformative.40 Following the introduction of NIHR services, there was a tenfold rise in publications, accompanied by a significant increase in mean citation ratios.41 A survey of NIHR stakeholders, including clinicians, nurses, and allied health professionals, also revealed that its training programmes enhanced their research capacity and strengthened individual career development.42

Limitations

This study had several limitations. First, we retrieved studies only from the MEDLINE database and not from other sources such as Scopus, Web of Science, Google Scholar, PsycINFO (psychology), CINAHL (nursing and allied health), or HMIC (healthcare management, administration, and policy). MEDLINE was selected because it is a freely accessible primary source for interrogating the biomedical literature without requiring an institutional user account. While MEDLINE focuses primarily on medicine and the biomedical sciences, other databases cover broader disciplines. Inclusion of these databases would have been ideal, but resource constraints prevented manual review of their records for relevance. Second, a comparison of patient outcomes between university and non-university hospitals was not performed because we were unable to determine whether the principal investigator at teaching hospitals was HA-employed or university-affiliated. Third, only crude mortality rates, LOS, and 30-day unplanned readmissions were evaluated. A more comprehensive review of public healthcare system key performance indicators, such as risk-adjusted or standardised mortality rates, symptom-to-intervention durations, incremental cost-effectiveness ratios, and patient satisfaction survey results, would have provided greater insight had such data been available.6 43 Important confounding factors were also not assessed, including each hospital’s annual operational income, differences in catchment population size and demographics, and variations in the scope of acute clinical services provided. For example, some institutions are recognised as level-one trauma centres or infectious disease centres. Finally, clinical research from specialties such as psychiatry and family medicine was likely under-represented, as most clinicians in these fields work in dedicated psychiatric hospitals or general outpatient clinics, which are outside the scope of this study focused on general acute care hospitals.

Conclusion

This study revealed that clinicians in Hong Kong’s non-university public hospitals produced nearly one-third of the original peer-reviewed clinical research articles published from the territory. Although the majority of these articles were case reports or retrospective studies, they achieved a relatively high degree of research influence within their respective medical specialties. Research impact appears to be associated with improved patient outcomes, particularly in terms of crude mortality rates and 30-day unplanned readmissions. Future studies using more refined key performance indicator endpoints and adjustments for confounding factors are necessary to ascertain whether research-active institutions consistently deliver better patient outcomes.

Author contributions

Concept or design: PYM Woo, DKK Wong, YF Lau.

Acquisition of data: PYM Woo, DKK Wong, QHW Wong, CKL Leung, YHK Ip, DM Au, TSF Shek, B Siu, C Cheung, K Shing, A Wong, Y Khare, OWK Tsui, NHY Tang, KM Kwok, MK Chiu.

Analysis or interpretation of data: PYM Woo, DKK Wong.

Drafting of the manuscript: PYM Woo, DKK Wong.

Critical revision of the manuscript for important intellectual content: PYM Woo, DKK Wong, KHM Wan, WC Leung.

All authors had full access to the data, contributed to the study, approved the final version for publication, and take responsibility for its accuracy and integrity.

Conflicts of interest

All authors have disclosed no conflicts of interest.

Acknowledgement

The authors thank Kwong Wah Hospital’s Clinical Research Centre, The Hong Kong Student Association of Neuroscience, and the Hong Kong Olympia Academy for Clinical Neuroscience Research for providing essential secretarial support and assisting with data acquisition.

Funding/support

This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Ethics approval

The research was approved by the Joint Chinese University of Hong Kong–New Territories East Cluster Clinical Research Ethics Committee, Hong Kong (Ref No.: 2025.256).

References

1. Djulbegovic B, Guyatt GH. Progress in evidence-based medicine: a quarter century on. Lancet 2017;390:415-23.

2. Ioannidis JP. Why most clinical research is not useful. PLoS Med 2016;13:e1002049.

3. Chin WY, Wong WC, Yu EY. A survey exploration of the research interests and needs of family doctors in Hong Kong. Hong Kong Pract 2019;41:29-38.

4. Ozdemir BA, Karthikesalingam A, Sinha S, et al. Research activity and the association with mortality. PLoS One 2015;10:e0118253.

5. Bennett WO, Bird JH, Burrows SA, Counter PR, Reddy VM. Does academic output correlate with better mortality rates in NHS trusts in England? Public Health 2012;126 Suppl 1:S40-3.

6. García-Romero A, Escribano Á, Tribó JA. The impact of health research on length of stay in Spanish public hospitals. Res Policy 2017;46:591-604.

7. Pons J, Sais C, Illa C, et al. Is there an association between the quality of hospitals’ research and their quality of care? J Health Serv Res Policy 2010;15:204-9.

8. Clarke M, Loudon K. Effects on patients of their healthcare practitioner’s or institution’s participation in clinical trials: a systematic review. Trials 2011;12:16.

9. Boaz A, Hanney S, Jones T, Soper B. Does the engagement of clinicians and organisations in research improve healthcare performance: a three-stage review. BMJ Open 2015;5:e009415.

10. National Institute for Health and Care Research. NIHR Annual Report 2022/23. 2024. Available from: https://www.nihr.ac.uk/reports/nihr-annual-report-202223/34501. Accessed 18 Oct 2024.

11. Ciemins EL, Mollis BL, Brant JM, et al. Clinician engagement in research as a path toward the learning health system: a regional survey across the northwestern United States. Health Serv Manage Res 2020;33:33-42.

12. Cheung BM, Yau HK. Clinical therapeutics in Hong Kong. Clin Ther 2019;41:592-7.

13. Sek AC, Cheung NT, Choy KM, et al. A territory-wide electronic health record—from concept to practicality: the Hong Kong experience. Stud Health Technol Inform 2007;129:293-6.

14. Kong X, Yang Y, Gao J, et al. Overview of the health care system in Hong Kong and its referential significance to mainland China. J Chin Med Assoc 2015;78:569-73.

15. Our Hong Kong Foundation; Hong Kong Science and Technology Parks. Developing Hong Kong into Asia’s leading clinical innovation hub. November 2023. Available from: https://ourhkfoundation.org.hk/sites/default/files/media/pdf/OHKF_BioTech_Report_2023_EN.pdf. Accessed 25 Oct 2024.

16. Kovalchik S. RISmed: download content from NCBI Databases. R package version 2.3.0; 2021. Available from: https://CRAN.R-project.org/package=RISmed. Accessed 2 Jan 2023.

17. The R Foundation. R: a language and environment for statistical computing. Vienna, Austria; 2022. Available from: https://www.R-project.org/. Accessed 2 Jan 2023.

18. Aquilina J, Neves JB, Tran MG. An overview of study designs. Br J Hosp Med (Lond) 2020;81:1-6.

19. Page MJ, McKenzie JE, Bossuyt PM, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. Syst Rev 2021;10:89.

20. Krnic Martinic M, Meerpohl JJ, von Elm E, Herrle F, Marusic A, Puljak L. Attitudes of editors of core clinical journals about whether systematic reviews are original research: a mixed-methods study. BMJ Open 2019;9:e029704.

21. Garfield E. The history and meaning of the journal impact factor. JAMA 2006;295:90-3.

22. Suelzer EM, Jackson JL. Measures of impact for journals, articles, and authors. J Gen Intern Med 2022;37:1593-7.

23. Bergstrom CT, West JD. Assessing citations with the Eigenfactor metrics. Neurology 2008;71:1850-1.

24. Hutchins BI, Yuan X, Anderson JM, Santangelo GM. Relative Citation Ratio (RCR): a new metric that uses citation rates to measure influence at the article level. PLoS Biol 2016;14:e1002541.

25. Casadevall A, Fang FC. Impacted science: impact is not importance. mBio 2015;6:e01593-15.

26. Kreiner G. The slavery of the h-index—measuring the unmeasurable. Front Hum Neurosci 2016;10:556.

27. Reddy V, Gupta A, White MD, et al. Assessment of the NIH-supported relative citation ratio as a measure of research productivity among 1687 academic neurological surgeons. J Neurosurg 2020;134:638-45.

28. Didzbalis CJ, Avery Cohen D, Herzog I, Park J, Weisberger J, Lee ES. The Relative Citation Ratio: a modern approach to assessing academic productivity within plastic surgery. Plast Reconstr Surg Glob Open 2022;10:e4564.

29. Gupta A, Meeter A, Norin J, Ippolito JA, Beebe KS. The Relative Citation Ratio (RCR) as a novel bibliometric among 2511 academic orthopedic surgeons. J Orthop Res 2023;41:1600-6.

30. Balinska MA, Watts RA. The value of case reports in democratising evidence from resource-limited settings: results of an exploratory survey. Health Res Policy Syst 2020;18:84.

31. Suvvari TK. Are case reports valuable? Exploring their role in evidence-based medicine and patient care. World J Clin Cases 2024;12:5452-5.

32. Selby P, Autier P. The impact of the process of clinical research on health service outcomes. Ann Oncol 2011;22 Suppl 7:vii5-9.

33. Majumdar SR, Roe MT, Peterson ED, Chen AY, Gibler WB, Armstrong PW. Better outcomes for patients treated at hospitals that participate in clinical trials. Arch Intern Med 2008;168:657-62.

34. Rich AL, Tata LJ, Free CM, et al. How do patient and hospital features influence outcomes in small-cell lung cancer in England? Br J Cancer 2011;105:746-52.

35. Rochon J, du Bois A. Clinical research in epithelial ovarian cancer and patients’ outcome. Ann Oncol 2011;22 Suppl 7:vii16-9.

36. Janni W, Kiechle M, Sommer H, et al. Study participation improves treatment strategies and individual patient care in participating centers. Anticancer Res 2006;26:3661-7.

37. Downing A, Morris EJ, Corrigan N, et al. High hospital research participation and improved colorectal cancer survival outcomes: a population-based study. Gut 2017;66:89-96.

38. DiDiodato G, DiDiodato JA, McKee AS. The research activities of Ontario’s large community acute care hospitals: a scoping review. BMC Health Serv Res 2017;17:566.

39. Senecal JB, Metcalfe K, Wilson K, Woldie I, Porter LA. Barriers to translational research in Windsor Ontario: a survey of clinical care providers and health researchers. J Transl Med 2021;19:479.

40. Gehrke P, Binnie A, Chan SP, et al. Fostering community hospital research. CMAJ 2019;191:E962-6.

41. Kiparoglou V, Brown LA, McShane H, Channon KM, Shah SG. A large National Institute for Health Research (NIHR) Biomedical Research Centre facilitates impactful cross-disciplinary and collaborative translational research publications and research collaboration networks: a bibliometric evaluation study. J Transl Med 2021;19:483.

42. Burkinshaw P, Bryant LD, Magee C, et al. Ten years of NIHR research training: perceptions of the programmes: a qualitative interview study. BMJ Open 2022;12:e046410.

43. Jonker L, Fisher SJ, Dagnan D. Patients admitted to more research-active hospitals have more confidence in staff and are better informed about their condition and medication: results from a retrospective cross-sectional study. J Eval Clin Pract 2020;26:203-8.